
APIs form the backbone of communication between apps and services on the Internet. They are a quick way for an application to ask for data or ask that a task be performed by a service. For example, anyone can write a weather app without being a meteorologist: simply ask a weather API for the forecast and display it in your app.

Speed is inherent to the API use case. Rather than transferring bulky files like images and HTML, APIs only share the essential data needed to render a webpage or an app. However, despite their efficiency, Internet latency can still impede API data transfers. If the server processing a user’s API request is located far from that user, the network round trip time can degrade that user’s experience.

Cloudflare's global network is specifically designed to optimize and accelerate Internet traffic, including APIs. Our users enjoy features like 11ms DNS responses, load balancing, and Argo Smart Routing, which significantly improve API traffic speed. For web content, Cloudflare customers have always been able to cache their web traffic, serving requests from the closest data center and thereby reducing network round trip time and server processing time to a bare minimum. Now, we are bringing these benefits to API traffic in exciting new ways.

Today we’re announcing Ricochet for API Gateway, the easiest way for Cloudflare customers to achieve faster API responses. Customers using Cloudflare’s API Gateway will be able to enable Ricochet for their API endpoints and automatically reduce average latency through intelligent caching of API requests that would otherwise go to origin. Ricochet will even work for things you previously thought un-cacheable, like GraphQL POST requests. Best of all, there are no changes to make at your origin. Just configure API Gateway with your API session identifiers and leave the rest to us.

Enabling Ricochet for your APIs will cause Cloudflare to cache many of the basic, repetitive API calls from your applications and deliver them to users faster than ever. At first, your product metrics might even look broken with lower latency and fewer requests at origin. But these metrics will be a new sign of success, reflecting your app’s new speedy user experience.

Why you should cache API responses

It isn’t news that page load times correlate directly with how much site visitors spend. Organizations have spent the last decade obsessing over static content optimization to deliver websites faster every year. Faster apps improve business metrics for most websites. For example, faster sites receive more sales orders and have higher customer loyalty. Faster sites are also critical for engagement during marketing campaigns. Caching API requests will make your sites and apps faster by lowering the amount of time required to populate your app with data for your users.

The tools for caching APIs have always been available. But why isn’t it more common? We hypothesize a few reasons. It could be that API developers assume that APIs are too dynamic to cache, and that it only helps after lots of analysis of the application’s performance. It could also be that the security concerns around caching APIs are non-trivial. If both of those are true, you can imagine how caching APIs would only be successful with a large cross-organizational approach and lots of effort.

Let’s say your organization decided to try API caching anyway. We know there are a few problems if you want to cache APIs. First, traditional caching methods aren’t necessarily ready out of the box for caching APIs; cache invalidation needs to happen quickly and automatically. Second, special standalone cache tooling exists, but it doesn’t help you if it’s placed next to the origin and your users are globally distributed. Lastly, it’s hard to get security right when caching user data. It’s strictly forbidden to serve one user’s data to another by accident. Cloudflare has superpowers in these areas: knowledge of origin response time and existing cache-hit ratios, customer-configured API session IDs to establish secure user association with API requests, and the scale of our global network. We’re bringing together API Management with our robust caching infrastructure to safely and automatically cache API requests.

Cloudflare’s unique approach

The HTTP methods POST, PUT, and DELETE aren't generally cacheable. These methods are meant to change information at the origin on a one-time basis, and are therefore “non-safe”. If you responded to a non-safe request from cache, the data on the API server would not be updated. Compare this with “safe” HTTP methods: GET, OPTIONS, and HEAD. Caching requests with safe methods is straightforward as data at the origin does not change per-request.

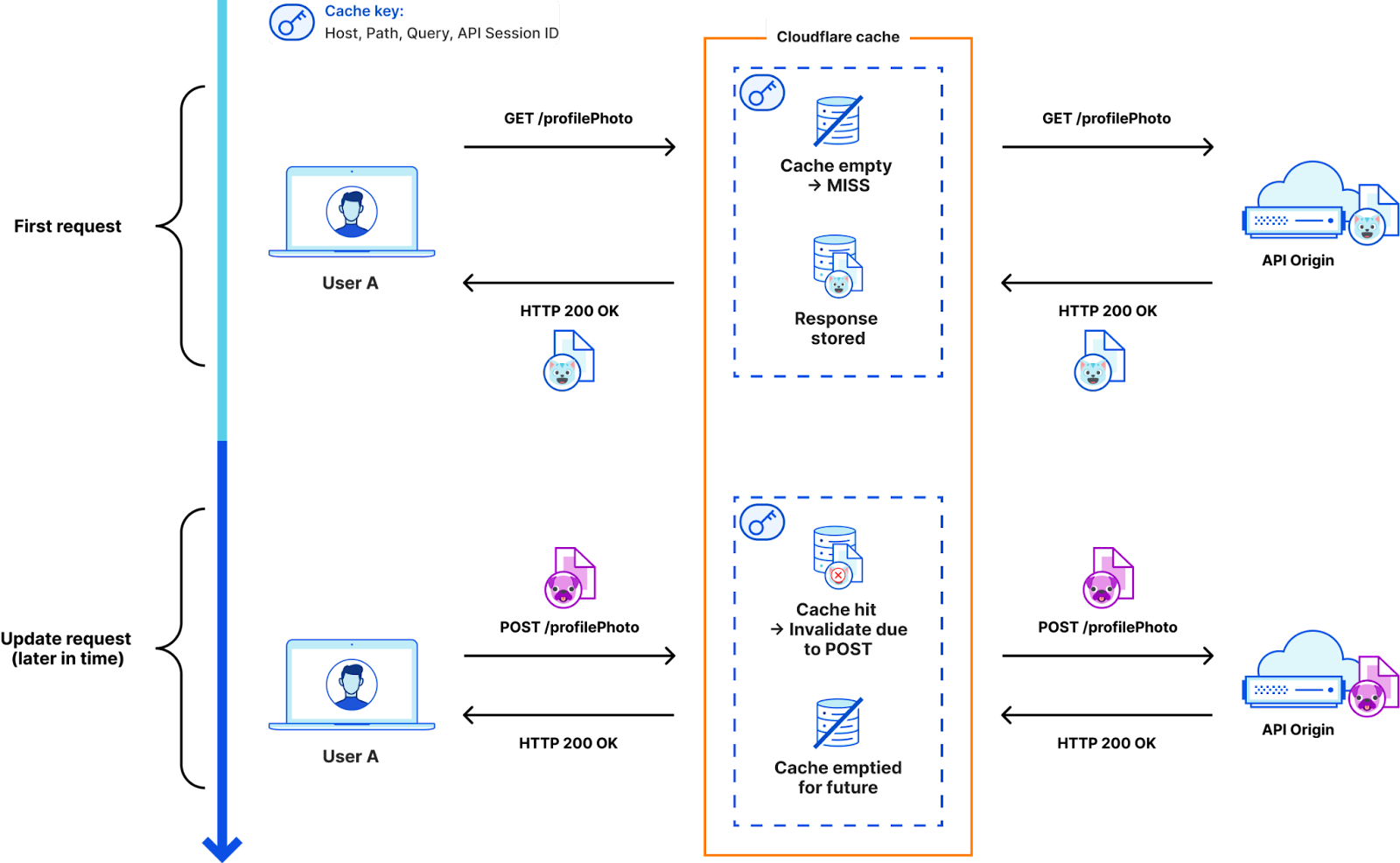

But what do we know about RESTful APIs that can make caching easier? The endpoints usually stay the same when operating on objects, but the methods change. We will enable caching for safe methods and then automatically invalidate the cache when we see a non-safe method request for a RESTful endpoint managed by API Gateway. It’s also possible you have updates on one endpoint that change another endpoint’s data. In that event, we urge you to consider whether API Gateway’s short default TTLs, which allow a small delay between updating data at the origin and serving that update from cache, fit your use case. The diagram below shows an example of how automatic cache invalidation would work for shared paths with different methods:

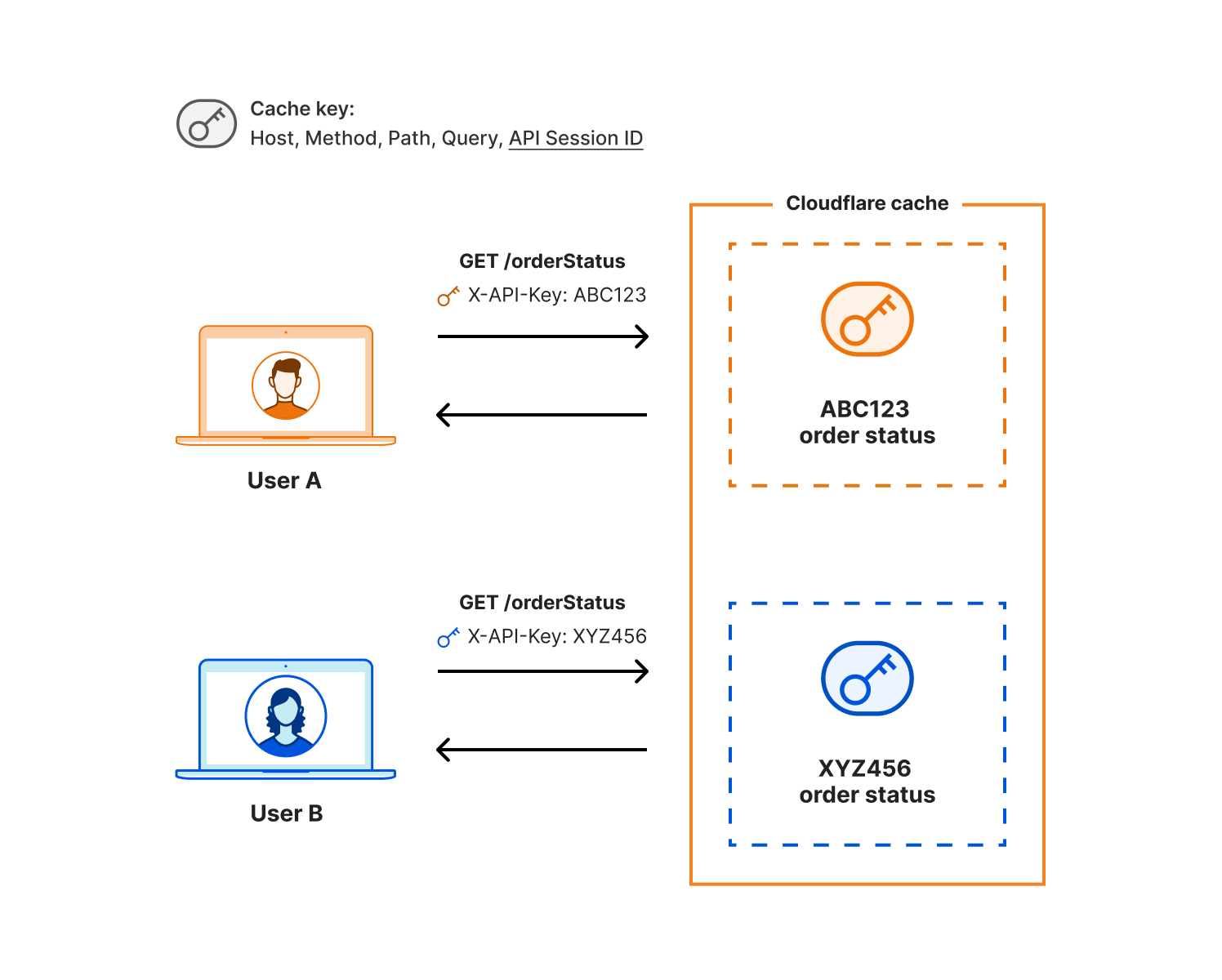
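The invalidation flow described above can be sketched in a few lines of Python. This is a toy, in-memory illustration and not how Ricochet is implemented: the `EndpointCache` class, its `handle` method, the `fetch_origin` callback, and the 5-second default TTL are all hypothetical names and values chosen for the example. It also ignores per-user cache keys, which matter for security.

```python
import time
from typing import Callable, Dict, Tuple

# Safe methods do not change data at the origin, so their responses may be cached.
SAFE_METHODS = {"GET", "HEAD", "OPTIONS"}


class EndpointCache:
    """Toy in-memory cache for one API (hypothetical, for illustration only)."""

    def __init__(self, ttl_seconds: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        # (method, path) -> (time stored, response body)
        self._entries: Dict[Tuple[str, str], Tuple[float, str]] = {}

    def handle(self, method: str, path: str,
               fetch_origin: Callable[[str, str], str]) -> Tuple[str, str]:
        """Return (response body, cache status), status being HIT, MISS, or BYPASS."""
        method = method.upper()

        if method not in SAFE_METHODS:
            # Non-safe request: it must reach the origin, and anything cached
            # for the same path is now potentially stale, so drop it.
            body = fetch_origin(method, path)
            for key in [k for k in self._entries if k[1] == path]:
                del self._entries[key]
            return body, "BYPASS"

        key = (method, path)
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl_seconds:
            return entry[1], "HIT"

        # Cache miss or expired entry: fetch from origin and store the result.
        body = fetch_origin(method, path)
        self._entries[key] = (now, body)
        return body, "MISS"
```

In this sketch, a `GET /items/1` followed by a second `GET /items/1` is served from cache, a `PUT /items/1` goes to origin and clears the cached entry, and the next `GET /items/1` misses and picks up the updated data. An update made through a different path would not trigger invalidation here, which is the case where the TTL has to bound staleness.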

Even for safe requests, caching API data is risky when it comes to security. Done incorrectly, you could accidentally serve sensitive user data to the wrong person. That’s why Ricochet’s cache key includes the user’s API session identifier. This extra information in the cache key ensures that only an authorized user is able to receive their own cached data. For APIs without authentication, we hash the request parameters themselves and include that hash in the cache key, to ensure the correct data is returned for endpoints with static paths but variable inputs. Here’s an example for authenticated APIs:

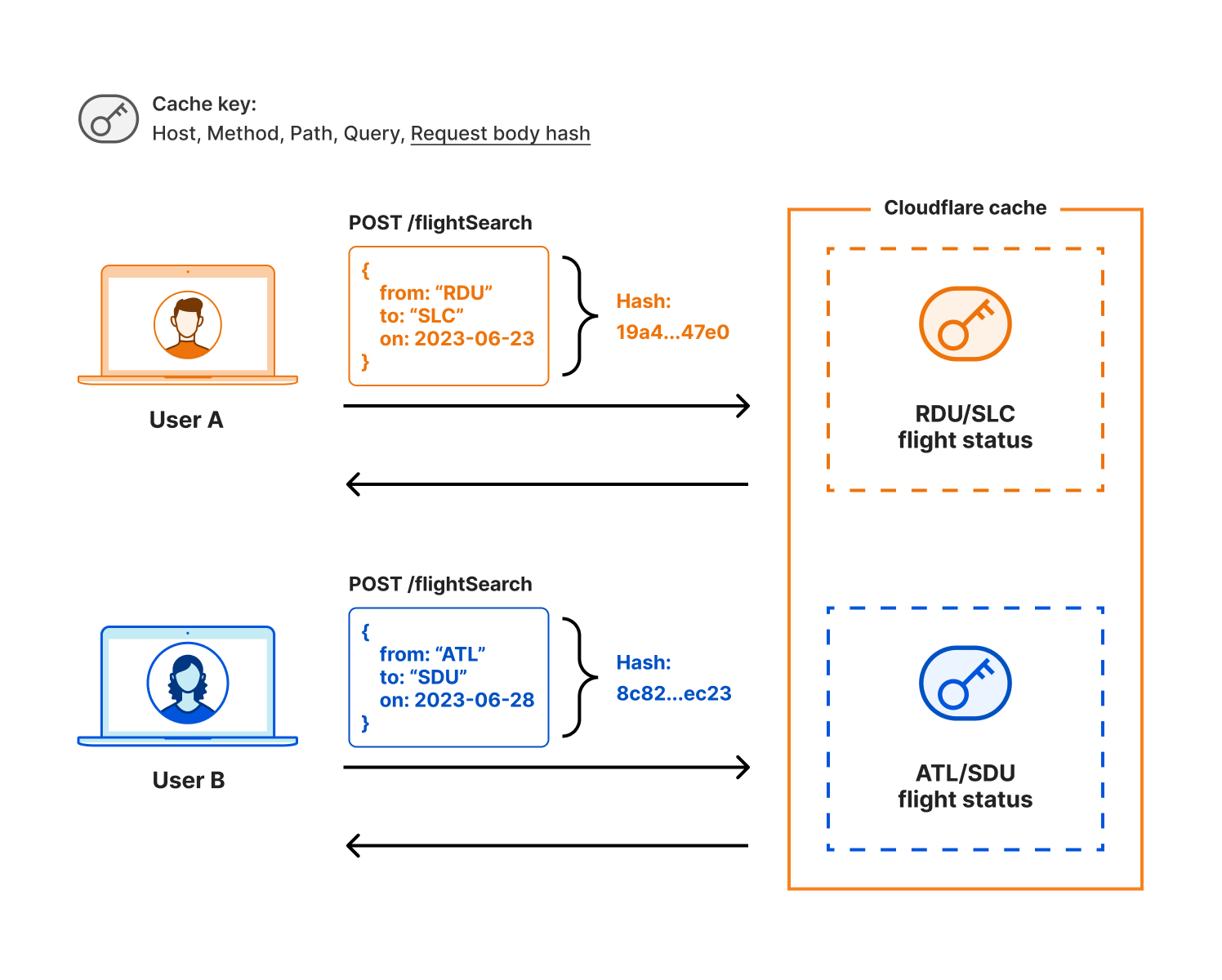

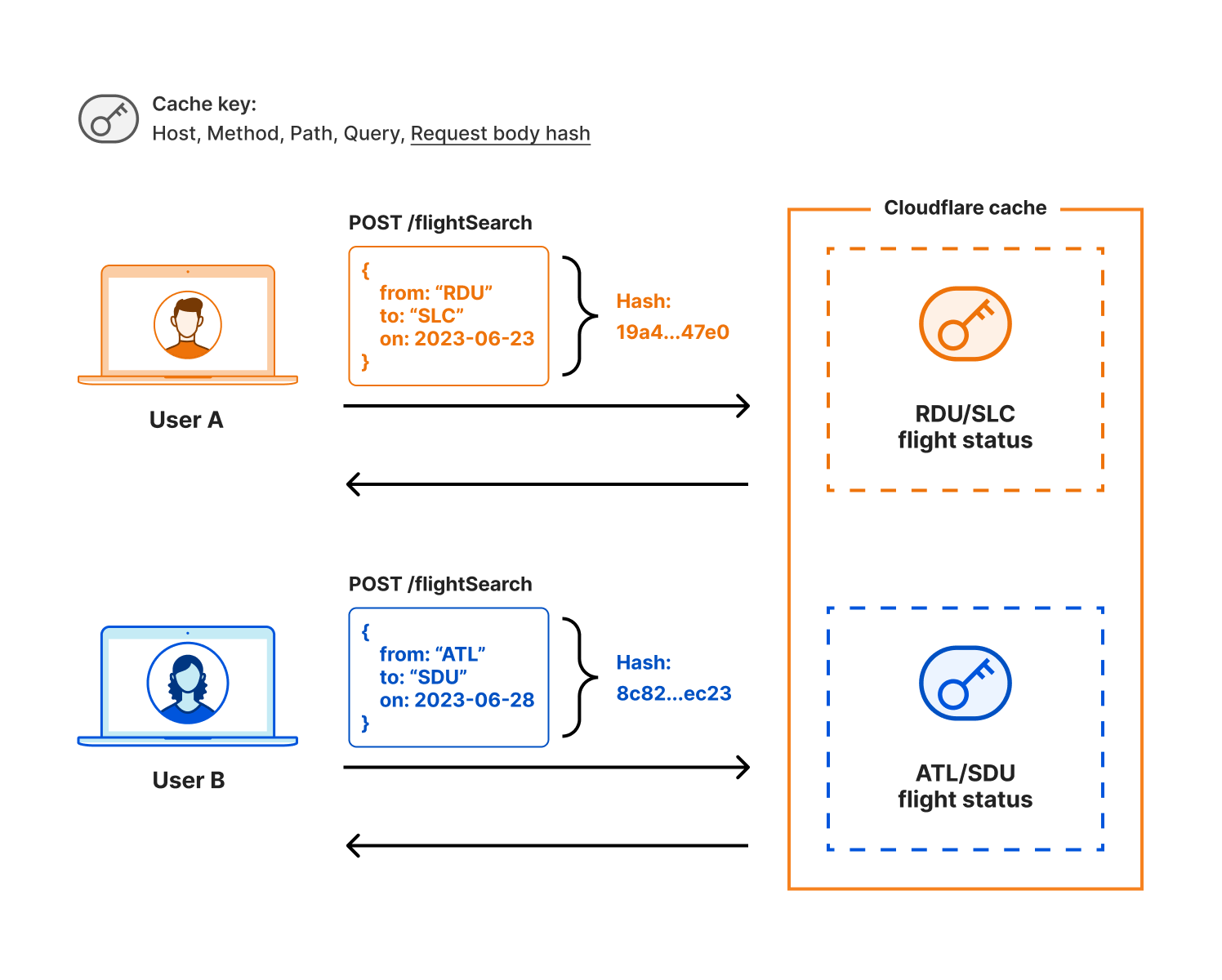

And here’s an example for anonymous APIs, where we use a hash of the request body to preserve privacy and still enable unique, useful cache keys:
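As a rough sketch of the idea, the following Python function builds a cache key that is scoped to a session for authenticated requests and varies with a hash of the request body in both cases. The function name `build_cache_key`, the key layout, the choice of SHA-256, and the decision to hash the session identifier before placing it in the key are assumptions made for illustration; they are not Ricochet's actual key format.

```python
import hashlib
from typing import Optional


def build_cache_key(method: str, host: str, path: str,
                    body: bytes = b"",
                    session_id: Optional[str] = None) -> str:
    """Build an illustrative cache key (hypothetical layout, not a real product format)."""
    # Hash the body so endpoints with a static path but variable inputs
    # (for example GraphQL POST queries) get distinct keys.
    body_hash = hashlib.sha256(body).hexdigest()

    if session_id is not None:
        # Assumption: hash the session identifier so the raw token is not
        # stored in the key. Each session gets its own cache scope.
        scope = "session:" + hashlib.sha256(session_id.encode("utf-8")).hexdigest()
    else:
        scope = "anonymous"

    return "|".join([method.upper(), host.lower(), path, scope, body_hash])
```

With this layout, two users sending the same query to the same endpoint produce different keys, so one user's cached response is never returned to another. Two anonymous requests share a key only when their bodies are byte-for-byte identical.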

APIs can be ripe for caching

There are many API caching use cases out there, and we decided to start with two where our customers have asked us for help so far: mixed-authentication APIs where returning the correct data is critical, and APIs that have single endpoints that can return varied query results (think RESTful endpoints with variable inputs and GraphQL POST requests). These use cases include things like weather forecasts and current conditions, airline flight tracking and flight status, live sports scores, and collaborative tools with many users.

Even short cache control timers can be beneficial to reduce load at the origin and speed up responses. Consider an example of a popular public endpoint receiving 1,000 requests/second, or 60,000 requests/min at origin. Let’s assume the data at the origin changes unpredictably but not due to unique user interaction. For this use case, serving stale data for a few seconds or even a minute could be acceptable, and most users wouldn’t know or mind the difference. You could set your cache control to a very low 1 second and serve 999 of every 1,000 requests from cache. Only about one request per second would reach the origin, reducing origin traffic from 60,000 to only 60 requests/minute!
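The arithmetic behind this example can be checked with a short Python sketch. It makes simplifying assumptions that are ours, not part of the product: a single cache key, a single cache location, steady evenly spaced traffic, and exactly one origin fetch each time the TTL expires. The function names are hypothetical.

```python
def origin_requests_per_minute(ttl_seconds: float) -> float:
    """Origin fetches per minute when the cache refills once per TTL window."""
    return 60.0 / ttl_seconds


def cache_hit_ratio(requests_per_second: float, ttl_seconds: float) -> float:
    """Fraction of requests served from cache under the assumptions above."""
    requests_per_window = requests_per_second * ttl_seconds
    if requests_per_window <= 1.0:
        # At most one request per TTL window, so nothing is served from cache.
        return 0.0
    # One request per window goes to origin; the rest are cache hits.
    return 1.0 - 1.0 / requests_per_window


# The example from the text: 1,000 requests/second with a 1-second TTL.
print(origin_requests_per_minute(1.0))   # 60 origin requests per minute
print(cache_hit_ratio(1000.0, 1.0))      # about 0.999
```

Under the same assumptions, raising the TTL to 60 seconds would leave roughly one origin request per minute. On a distributed network each cache location refills independently, so real origin traffic would be a multiple of these figures.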

This is a simple example, and we urge you to think about your API and the potential performance improvements caching could bring.

Potential impact of caching APIs

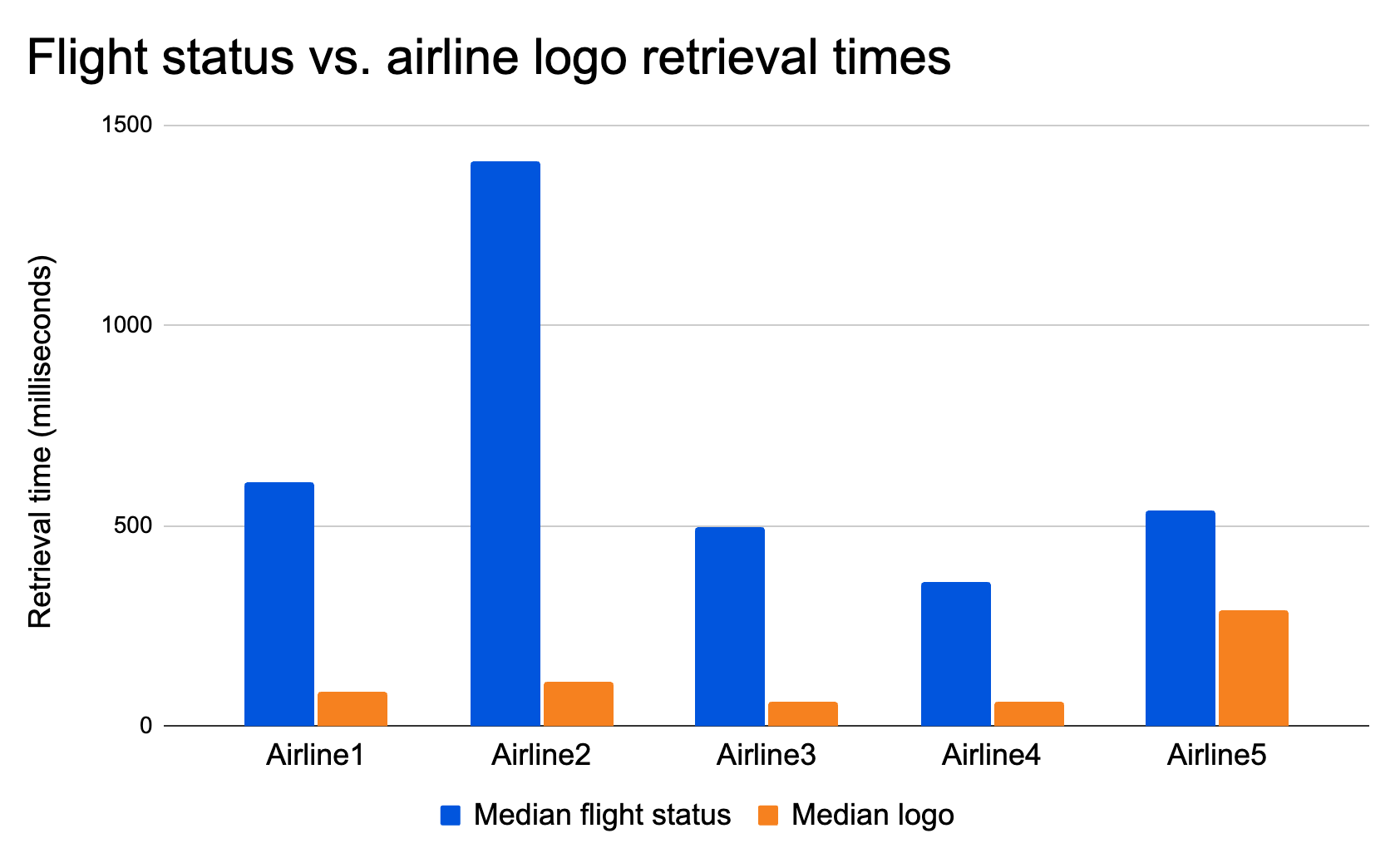

We profiled five top global airline website APIs for flight status checks and compared their retrieval time against the airline logo’s retrieval time. Here are the results:

On average, flight status data from all five airlines was ~7x slower to retrieve than the logo, even though that data could easily be cached!

Successful caching will also lower load on your API origin. The hidden benefit of lower load at origin is faster responses for the requests that miss the cache and do end up hitting origin. So it’s two for the price of one, and latency decreases all around. We aren’t going to reinvent your API overnight, but we are going to make a difference in your application’s response times by making it easy to add caching to your APIs.

Conclusion

We're launching Ricochet in 2024. It’s going to make a measurable difference speeding up your APIs, and the best part is that as an API Gateway customer it will be easy to get started without requiring tons of your team’s time. Let your account team know if you’d like to be on our waitlist for this feature. Our goal is to increase the amount of API caching on the Internet so that we can all benefit from faster response times and snappier apps.
