We’ve all experienced the frustration of slow-loading websites, or an app that seems to stall when it needs to call an API for an update. Anything less than instant and your mind wanders to something else...

One way to make things fast is to bring resources as close to the user as possible. This is what Cloudflare has been doing with compute by running within milliseconds of most of the world’s population. But, counterintuitive as it may seem, sometimes bringing compute closer to the user can actually slow applications down. If your application needs to connect to APIs, databases or other resources that aren’t located near the end user, then it can be more performant to run the application near those resources instead of near the user.

So today we’re excited to announce Smart Placement for Workers and Pages Functions, making every interaction as fast as possible. With Smart Placement, Cloudflare is taking serverless computing to the Supercloud by moving compute resources to optimal locations in order to speed up applications. The best part – it’s completely automatic, without any extra input (like the dreaded “region”) needed.

Smart Placement is available now, in open beta, to all Workers and Pages customers!

The serverless shift

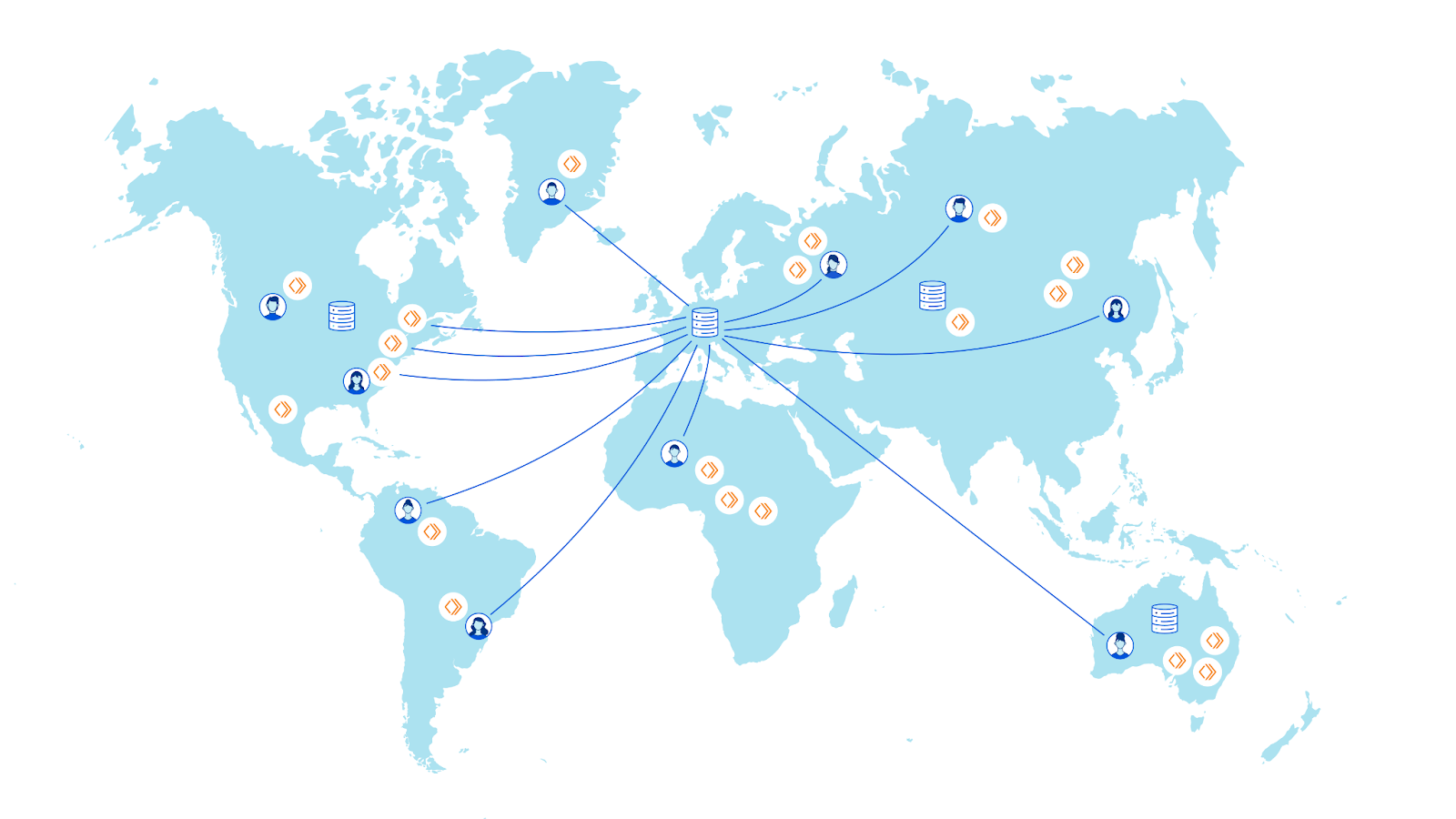

Cloudflare’s anycast network is built to process requests instantly and close to the user. For developers, that’s what makes Cloudflare Workers, our serverless compute offering, so compelling. Competitors are bounded by “regions” while Workers run everywhere, which is why we have one region: Earth. Requests handled entirely by Workers can be processed right then and there, without ever having to hit an origin server.

While this concept of serverless was originally considered to be for lightweight tasks, serverless computing has been seeing a shift in recent years. It’s being used to replace traditional architecture, which relies on origin servers and self-managed infrastructure, instead of simply augmenting it. We’re seeing more and more of these use cases with Workers and Pages users.

Serverless needs state

With the shift to going serverless and building entire applications on Workers comes a need for data. Storing information about previous actions or events lets you build personalized, interactive applications. Say you need to create user profiles, remember which page a user left off at, or track which SKUs a user has in their cart – all of these map to data points used to maintain state. Backend services like relational databases, key-value stores, blob storage, and APIs all let you build stateful applications.

Cloudflare compute + storage: a powerful duo

We have our own growing suite of storage offerings: Workers KV, Durable Objects, D1, and R2. As we mature our data products, we think deeply about their interactions with Workers so that you don’t have to! For example, another approach that performs better in some cases is moving storage, rather than compute, close to users. If you’re using Durable Objects to create a real-time game, we could move the Durable Objects to minimize latency for all users.

Our goal for the future state is that you set mode = "smart" and we evaluate the optimal placement of all your resources with no additional configuration needed.

Cloudflare compute + ${backendService}

Today, the primary use case for Smart Placement is when you’re using non-Cloudflare services like external databases or third party APIs for your applications.

Many backend services, whether they’re self-hosted or managed services, are centralized, meaning that data is stored and managed in a single location. Your users are global and Workers are global, but your backend is centralized.

If your code makes multiple requests to your backend services, those requests could be crossing the globe multiple times, which takes a big toll on performance. Some services offer data replication and caching, which help to improve performance but also come with trade-offs, like weaker data consistency and higher costs, that should be weighed against your use case.

The Cloudflare network is ~50ms from 95% of the world’s connected population. Turning this on its head, we're also very close to your backend services.

Application performance is user experience

Let’s understand how moving compute close to your backend services could decrease application latency by walking through an example:

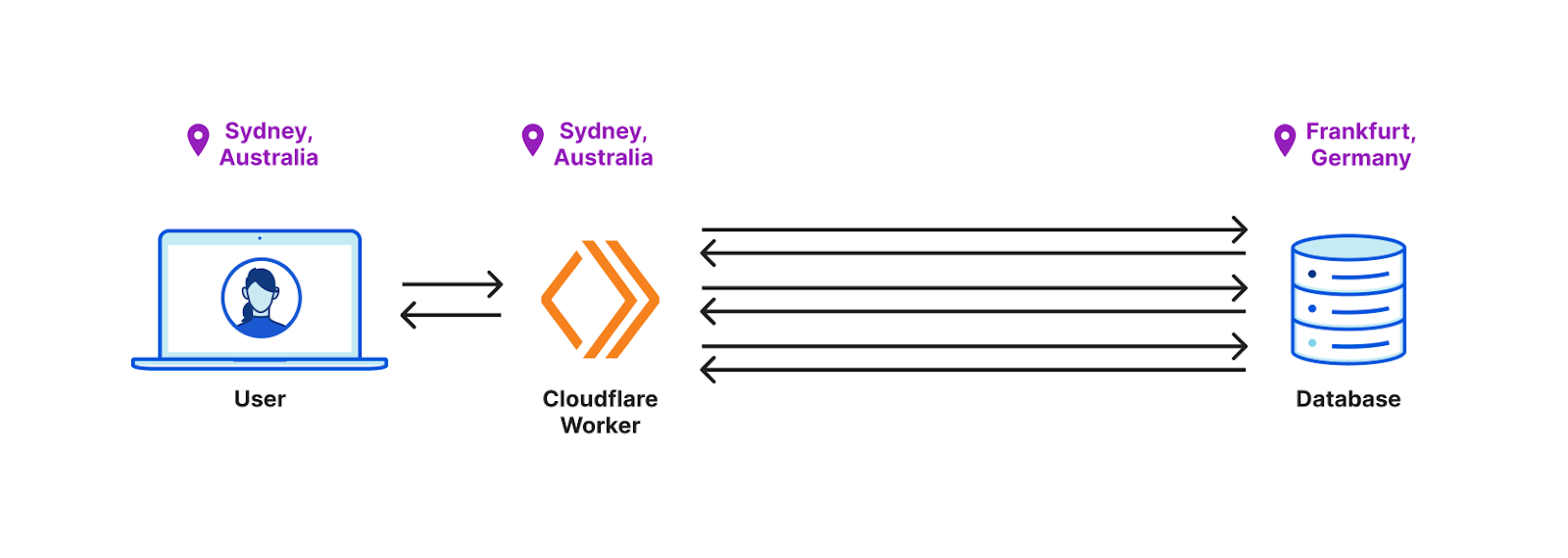

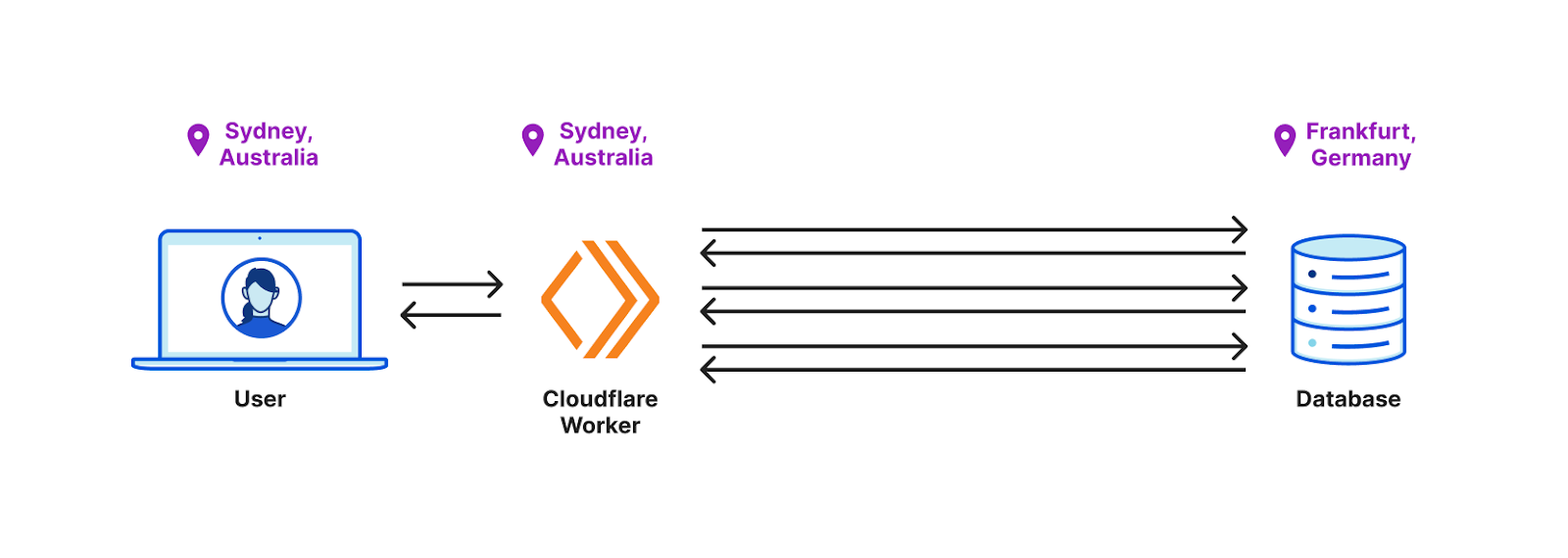

Say you have a user in Sydney, Australia who’s accessing an application running on Workers. This application makes three round trips to a database located in Frankfurt, Germany in order to serve the user’s request.

Intuitively, you can guess that the bottleneck is going to be the time that it takes the Worker to perform multiple round trips to your database. Instead of the Worker being invoked close to the user, what if it was invoked in a data center closest to the database instead?
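To make that intuition concrete, here is a rough back-of-the-envelope model in TypeScript. The round-trip times are illustrative assumptions, not measurements, and the model ignores connection setup, processing time, and anything else beyond raw round trips, so it is not expected to reproduce real-world numbers.

```typescript
// Back-of-the-envelope latency model for the Sydney -> Frankfurt example.
// All round-trip times (RTTs) below are assumed values chosen for illustration.
interface Scenario {
  userToWorkerMs: number;    // RTT between the user and the data center running the Worker
  workerToBackendMs: number; // RTT between the Worker and the backend database
  backendRoundTrips: number; // sequential round trips the Worker makes to the backend
}

// Total time: one user <-> Worker round trip plus N Worker <-> backend round trips.
function totalRequestMs(s: Scenario): number {
  return s.userToWorkerMs + s.backendRoundTrips * s.workerToBackendMs;
}

// Worker runs next to the user in Sydney; every database call crosses the globe.
const nearUser: Scenario = { userToWorkerMs: 10, workerToBackendMs: 300, backendRoundTrips: 3 };

// Worker runs next to the database in Frankfurt; only the user's request crosses the globe.
const nearBackend: Scenario = { userToWorkerMs: 300, workerToBackendMs: 10, backendRoundTrips: 3 };

console.log(totalRequestMs(nearUser));    // 910
console.log(totalRequestMs(nearBackend)); // 330
```

Under these assumptions, the long-haul hop is paid once instead of three times, and the gap widens with every additional round trip to the backend.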

Let’s put this to the test.

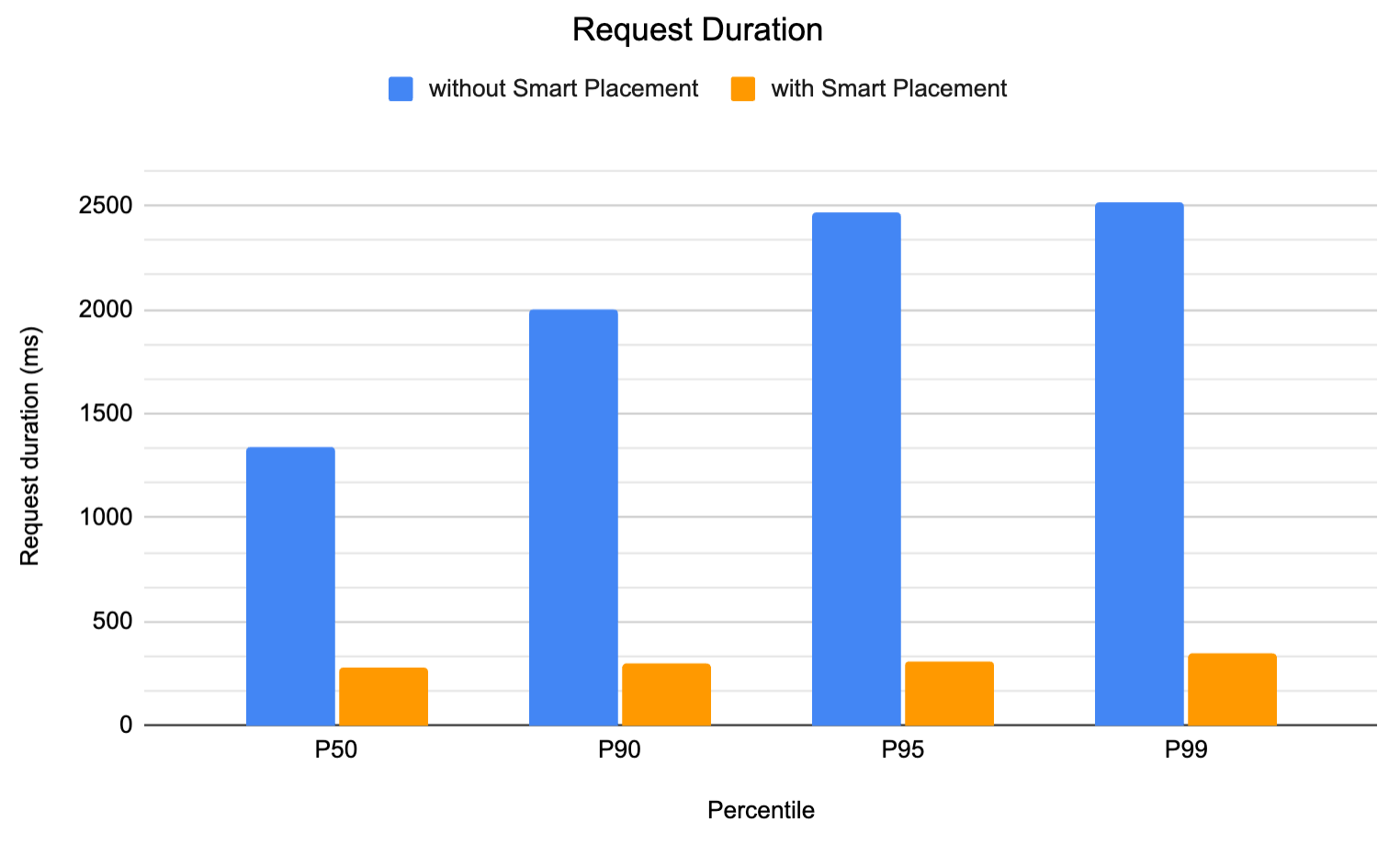

We measured the request duration for a Worker without Smart Placement and compared it to one with Smart Placement enabled. For both tests, we sent 3,500 requests from Sydney to a Worker which does three round trips to an Upstash instance (free-tier) located in eu-central-1 (Frankfurt).

The results are clear! In this example, moving the Worker close to the backend improved application performance by 4-8x.

Network decisions shouldn’t be human decisions

As a developer, you should focus on what you do best – building applications – without needing to worry about the network decisions that will make your application faster.

Cloudflare has a unique vantage point: our network gathers intelligence around the optimal paths between users, Cloudflare data centers and back-end servers – we have lots of experience in this area with Argo Smart Routing. Smart Placement takes these factors into consideration to automatically place your Worker in the best spot to minimize overall request duration.

So, how does Smart Placement work?

Smart Placement can be enabled on a per-Worker basis under the “Settings” tab or in your wrangler.toml file:

[placement]
mode = "smart"

Once you enable Smart Placement on your Worker or Pages Function, the Smart Placement algorithm analyzes fetch requests (also known as subrequests) that your Worker is making in real time. It then compares these to latency data aggregated by our network. If we detect that on average your Worker makes more than one subrequest to a back-end resource, then your Worker will automatically get invoked from the optimal data center!
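The rule described above can be sketched roughly as follows. This is a simplified illustration and not Cloudflare's actual algorithm: the `CandidateLocation` shape, the function names, the latency figures, and the cost estimate (user round trip plus average subrequests times backend round trip) are all assumptions made for the example.

```typescript
// Illustrative sketch only: NOT the real Smart Placement implementation.
// Types, names, and the cost formula are hypothetical.
interface CandidateLocation {
  name: string;
  userRttMs: number;    // assumed round-trip time from users to this location
  backendRttMs: number; // assumed round-trip time from this location to the backend
}

// Average number of subrequests observed per incoming request.
function averageSubrequests(countsPerRequest: number[]): number {
  if (countsPerRequest.length === 0) return 0;
  const total = countsPerRequest.reduce((sum, n) => sum + n, 0);
  return total / countsPerRequest.length;
}

// Keep the default (near-user) location unless the Worker averages more than
// one subrequest; otherwise pick the location with the lowest estimated duration.
function choosePlacement(
  defaultLocation: CandidateLocation,
  candidates: CandidateLocation[],
  countsPerRequest: number[],
): CandidateLocation {
  const avg = averageSubrequests(countsPerRequest);
  if (avg <= 1) return defaultLocation;
  const estimate = (c: CandidateLocation) => c.userRttMs + avg * c.backendRttMs;
  return [defaultLocation, ...candidates].reduce((best, c) =>
    estimate(c) < estimate(best) ? c : best,
  );
}

const sydney: CandidateLocation = { name: "Sydney", userRttMs: 10, backendRttMs: 300 };
const frankfurt: CandidateLocation = { name: "Frankfurt", userRttMs: 300, backendRttMs: 10 };

console.log(choosePlacement(sydney, [frankfurt], [3, 3, 3]).name); // "Frankfurt"
console.log(choosePlacement(sydney, [frankfurt], [1, 1, 1]).name); // "Sydney"
```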

There are some back-end services that, for good reason, are not considered by the Smart Placement algorithm:

- Globally distributed services: If the services that your Worker communicates with are geo-distributed in many regions, Smart Placement isn’t a good fit. We automatically rule these out of the Smart Placement optimization.

- Analytics or logging services: Requests to analytics or logging services don’t need to be in the critical path of your application. waitUntil() should be used so that the response back to users isn’t blocked when instrumenting your code. Since waitUntil() doesn’t impact the request duration from a user’s perspective, we automatically rule analytics/logging services out of the Smart Placement optimization.
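As a minimal sketch of the waitUntil() pattern mentioned above: the handler hands the logging promise to the execution context and returns the response without awaiting it. The `IncomingRequest` and `WaitUntilContext` types are small stand-ins for the real Workers request and context objects, and `sendLog` is a hypothetical helper, not part of any Cloudflare API.

```typescript
// Stand-in for the fields of an incoming request that this example uses.
interface IncomingRequest {
  method: string;
  url: string;
}

// Stand-in for the Workers execution context; only waitUntil() is modeled here.
interface WaitUntilContext {
  waitUntil(promise: Promise<unknown>): void;
}

// Hypothetical logging helper. In a real Worker this might send the entry
// to an external analytics or logging service.
type SendLog = (entry: string) => Promise<void>;

function handleRequest(
  request: IncomingRequest,
  ctx: WaitUntilContext,
  sendLog: SendLog,
): Response {
  // Register the logging work without awaiting it, so it stays off the
  // critical path of the user's response.
  ctx.waitUntil(sendLog(`${request.method} ${request.url}`));
  return new Response("ok");
}
```

Because the response is returned before the logging promise settles, the time spent talking to the logging service does not add to the request duration the user sees.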

Refer to our documentation for a list of services not considered by the Smart Placement algorithm.

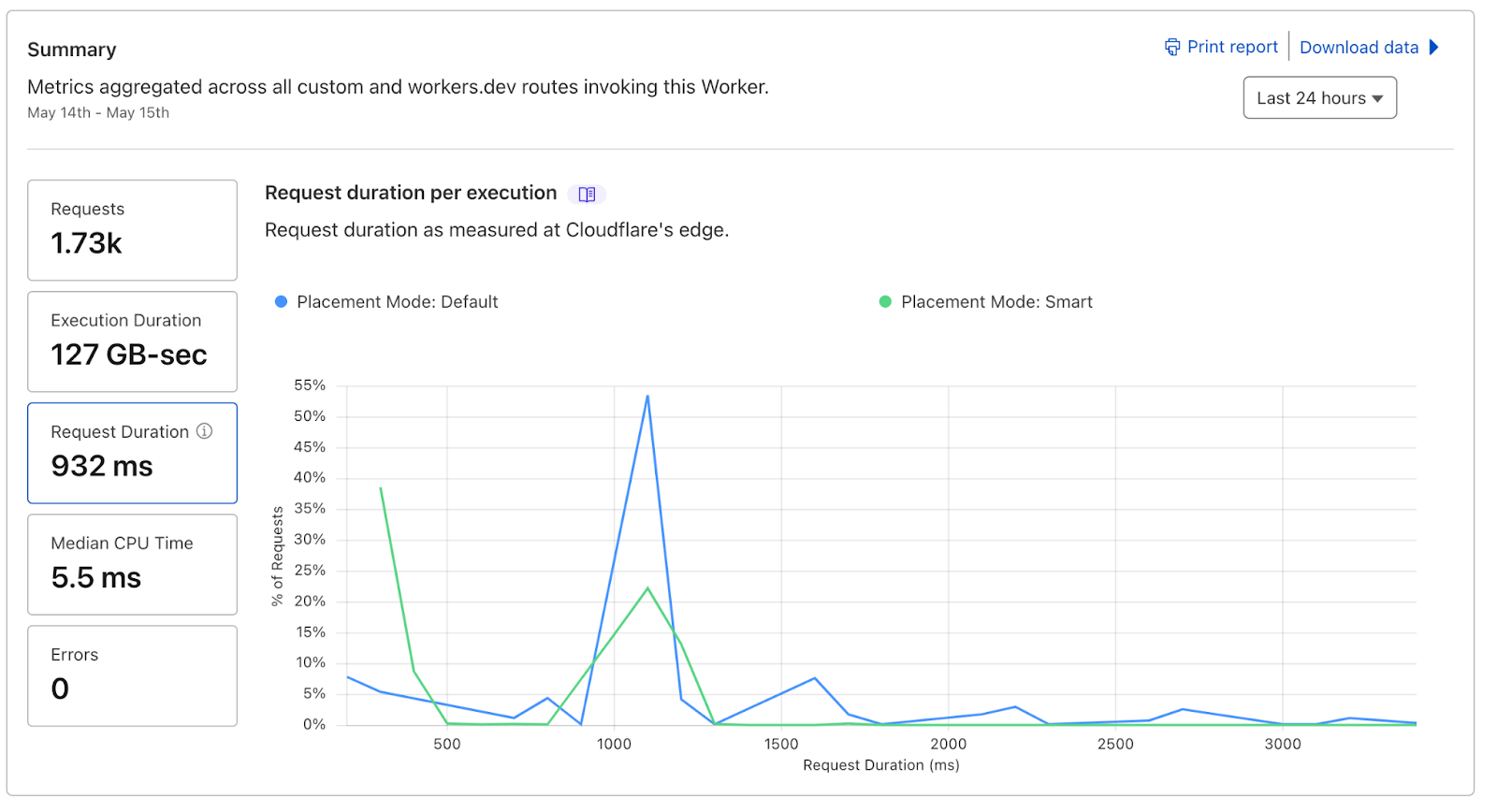

Once Smart Placement kicks in, you’ll be able to see a new “Request Duration” tab on your Worker. We route 1% of requests without Smart Placement enabled so that you can see its impact on request duration.

And yes, it is really that easy!

Try out Smart Placement by checking out our demo (it’s a lot of fun to play with!). To learn more, visit our developer documentation.

What’s next for Smart Placement?

We’re only getting started! We have lots of ideas on how we can improve Smart Placement:

- Support for calculating the optimal location when the application uses multiple back-ends

- Fine-tuned placement (e.g. if your Worker uses multiple back-ends depending on the path, we calculate the optimal placement per path instead of per Worker)

- Support for TCP based connections

We would like to hear from you! If you have feedback or feature requests, reach out through the Cloudflare Developer Discord.
