The Cloudflare Workers ecosystem now spans compute, hosting, storage, databases, streaming, networking, security, and much more. Over time, we've been trying to inspire others to switch from traditional software architectures by proving and documenting how complex applications that scale globally can be built on top of our stack.

Today, we're excited to welcome Constellation to the Cloudflare stack, enabling developers to run pre-trained machine learning models and inference tasks on Cloudflare's network.

One more building block in our Supercloud

Machine learning and AI have been hot topics lately, but the reality is that we have been using these technologies in our daily lives for years now, even if we do not realize it. Our mobile phones, computers, cars, and home assistants, to name a few examples, all have AI. It's everywhere.

But it isn't a commodity for developers yet. They often need to understand the mathematics behind it, the software and tools are dispersed and complex, and the hardware or cloud services needed to run the frameworks and data are expensive.

Today we're introducing another feature to our stack, allowing everyone to run machine learning models and perform inference on top of Cloudflare Workers.

Introducing Constellation

Constellation allows you to run fast, low-latency inference tasks using pre-trained machine learning models natively with Cloudflare Workers scripts.

Some examples of applications that you can deploy leveraging Constellation are:

- Image or audio classification or object detection

- Anomaly detection in data

- Text translation, summarization, or similarity analysis

- Natural Language Processing

- Sentiment analysis

- Speech recognition or text-to-speech

- Question answering

Developers can upload any supported model to Constellation. They can train them independently or download pre-trained models from machine learning hubs like HuggingFace or ONNX Zoo.

However, not everyone will want to train models or browse the Internet for models they haven't tested yet. For that reason, Cloudflare will also maintain a catalog of verified and ready-to-use models.

We built Constellation with a great developer experience and simple-to-use APIs in mind. Here's an example to get you started.

Image classification application

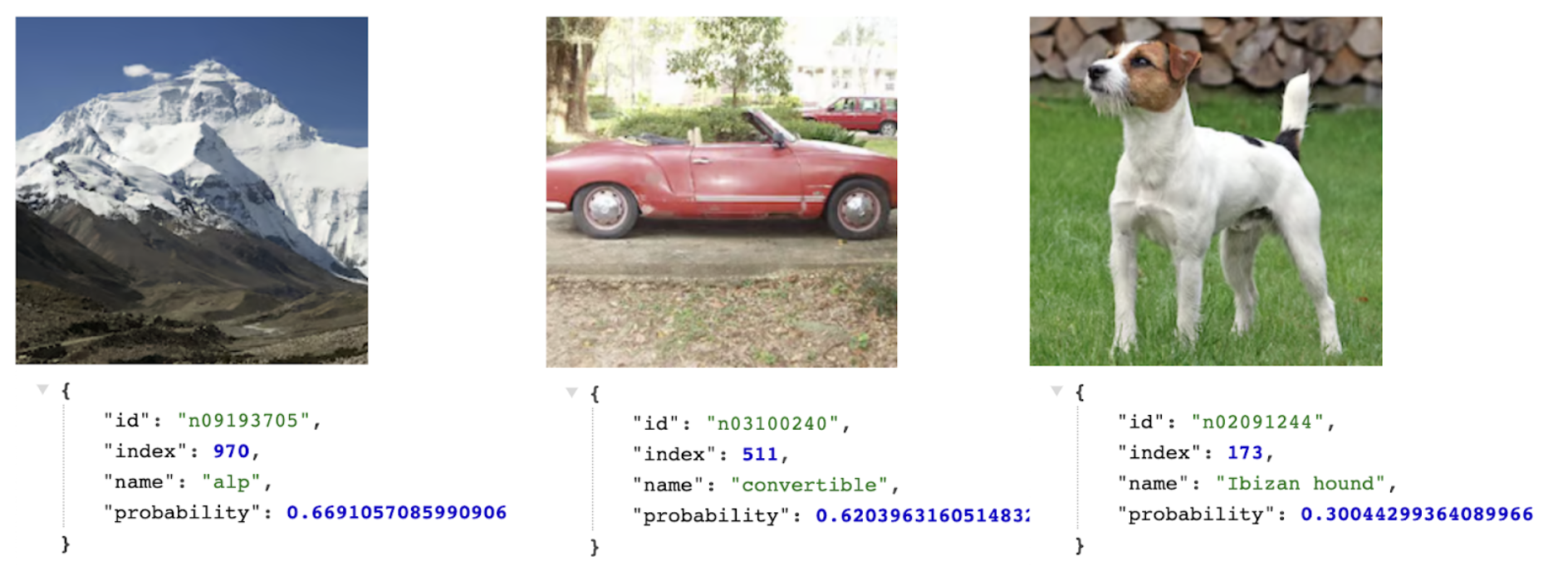

In this example, we will build an image classification app powered by the Constellation inference API and the SqueezeNet model, a convolutional neural network (CNN) that was pre-trained on more than one million images from the open-source ImageNet database and can classify images into up to 1,000 categories.

Compared with AlexNet, one of the original CNNs and a benchmark for image classification, SqueezeNet is much faster (~3x) and much smaller (~500x) while still achieving similar levels of accuracy. Its small footprint makes it ideal for running on portable devices with limited resources or custom hardware.

First, let's create a new Constellation project using the ONNX runtime. Wrangler now has built-in support for Constellation through the constellation command.

    $ npx wrangler constellation project create "image-classifier" ONNX

Now let’s create the wrangler.toml configuration file with the project binding:

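    # Top-level configuration
    name = "image-classifier-worker"
    main = "src/index.ts"
    compatibility_date = "2022-07-12"

    # Constellation project binding, exposed to the Worker as env.CLASSIFIER.
    # Each inline table is kept on a single line so the file parses as valid TOML.
    constellation = [
        { binding = 'CLASSIFIER', project_id = '2193053a-af0a-40a6-b757-00fa73908ef6' },
    ]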

Next, install the Constellation client API library:

    $ npm install @cloudflare/constellation --save-dev

Upload the pre-trained SqueezeNet 1.1 ONNX model to the project.

    $ wget https://github.com/microsoft/onnxjs-demo/raw/master/docs/squeezenet1_1.onnx
    $ npx wrangler constellation model upload "image-classifier" "squeezenet11" squeezenet1_1.onnx

As mentioned above, SqueezeNet classifies images into up to 1,000 object classes. These classes take the form of a list of synonym rings, or synsets. A synset has an id and a label; the term comes from Princeton's WordNet database, the same terminology used to label the ImageNet image database.

To translate SqueezeNet's results into human-readable image classes, we need a file that maps the synset ids (what we get from the model) to their corresponding labels.

    $ mkdir src; cd src
    $ wget https://raw.githubusercontent.com/microsoft/onnxjs-demo/master/src/data/imagenet.ts
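
To make the mapping concrete, here is a minimal sketch of how a list of class probabilities could be turned into labelled predictions. The `ClassMap` shape (class index to `[synset id, label]`), the three-entry `sampleClasses` table, and the `topK` helper are assumptions made for illustration; the downloaded file covers all 1,000 classes and may be organized differently.

```typescript
// Assumed shape of the class map: class index -> [synset id, label].
type ClassMap = Record<number, [string, string]>;

// Tiny illustrative sample; the real file covers all 1,000 ImageNet classes.
const sampleClasses: ClassMap = {
  0: ["n01440764", "tench"],
  1: ["n01443537", "goldfish"],
  2: ["n01484850", "great white shark"],
};

interface Prediction {
  id: string;          // synset id
  name: string;        // human-readable label
  probability: number; // probability assigned by the model
}

// Hypothetical helper: returns the k most probable classes, best first.
// Indices that are missing from the class map are skipped.
function topK(probs: ArrayLike<number>, classes: ClassMap, k: number): Prediction[] {
  const ranked = Array.from(probs, (probability, index) => ({ probability, index }));
  ranked.sort((a, b) => b.probability - a.probability);

  const predictions: Prediction[] = [];
  for (const { probability, index } of ranked.slice(0, k)) {
    const entry = classes[index];
    if (entry !== undefined) {
      predictions.push({ id: entry[0], name: entry[1], probability });
    }
  }
  return predictions;
}
```

Calling `topK(probabilities, sampleClasses, 5)` returns up to five predictions, and the first element is the model's best guess.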

And finally, let’s code and deploy our image classification script:

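    import { imagenetClasses } from './imagenet';
    import { Tensor, run } from '@cloudflare/constellation';

    export interface Env {
        // Constellation project binding declared in wrangler.toml
        CLASSIFIER: any,
    }

    export default {
        async fetch(request: Request, env: Env, ctx: ExecutionContext) {
            // The image arrives as the "file" field of a multipart form upload
            const formData = await request.formData();
            const file = formData.get("file");
            if (!(file instanceof File)) {
                return new Response('Missing "file" form field', { status: 400 });
            }
            const data = await file.arrayBuffer();
            const result = await processImage(env, data);
            return new Response(JSON.stringify(result));
        },
    };

    // decodeImage and softmax are helper functions that are not shown here
    async function processImage(env: Env, data: ArrayBuffer) {
        // Decode the PNG into float32 data for a [batch, channels, height, width] tensor
        const input = await decodeImage(data);
        const tensorInput = new Tensor("float32", [1, 3, 224, 224], input);

        // Run inference; "MODEL-UUID" stands for the id of the uploaded model
        const output = await run(env.CLASSIFIER, "MODEL-UUID", tensorInput);

        // Convert the raw model outputs to probabilities and keep the five most likely classes
        const probs = output.squeezenet0_flatten0_reshape0.value;
        const softmaxResult = softmax(probs);
        const results = imagenetClasses(softmaxResult, 5);
        const topResult = results[0];
        return topResult;
    }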

This script reads an image from the request, decodes it into a multidimensional float32 tensor (right now we only decode PNGs, but we can add other formats), feeds it to the SqueezeNet model running in Constellation, gets the results, matches them with the ImageNet classes list, and returns the human-readable tags for the image.
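
The decoding step is not shown in the script, so here is a rough sketch of what its final stage might look like. It assumes the PNG has already been decoded and resized to RGBA bytes, and that the model expects channel-first data normalized with the commonly used ImageNet mean and standard deviation. The function name `rgbaToChwFloat32` and both constants are assumptions, not part of the Constellation API.

```typescript
// Commonly used ImageNet normalization constants (assumed, not confirmed by this post).
const IMAGENET_MEAN: number[] = [0.485, 0.456, 0.406];
const IMAGENET_STD: number[] = [0.229, 0.224, 0.225];

// Hypothetical helper: converts interleaved RGBA bytes (R,G,B,A,R,G,B,A,...)
// into planar, channel-first float32 data (all R, then all G, then all B).
// The alpha channel is dropped.
function rgbaToChwFloat32(
  rgba: Uint8Array | Uint8ClampedArray,
  width: number,
  height: number,
): Float32Array {
  const pixels = width * height;
  if (rgba.length !== pixels * 4) {
    throw new Error(`expected ${pixels * 4} RGBA bytes, got ${rgba.length}`);
  }
  const out = new Float32Array(3 * pixels);
  for (let i = 0; i < pixels; i++) {
    for (let c = 0; c < 3; c++) {
      const scaled = rgba[i * 4 + c] / 255; // scale 0..255 to 0..1
      out[c * pixels + i] = (scaled - IMAGENET_MEAN[c]) / IMAGENET_STD[c];
    }
  }
  return out;
}
```

For a 224×224 image the result holds 3 × 224 × 224 values, which matches the `[1, 3, 224, 224]` shape passed to `Tensor` in the script.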

Pretty simple, no? Let’s test it:

    $ curl https://ai.cloudflare.com/demos/image-classifier -F file=@images/mountain.png | jq .name
    alp

    $ curl https://ai.cloudflare.com/demos/image-classifier -F file=@images/car.png | jq .name
    convertible

    $ curl https://ai.cloudflare.com/demos/image-classifier -F file=@images/dog.png | jq .name
    Ibizan hound

You can see the probabilities in action here. The model is quite sure about the alp and the convertible, but the Ibizan hound has a lower probability. Indeed, the dog in the picture is of a different breed.
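
These probabilities come from applying a softmax to the raw scores returned by the model. The script calls a `softmax` helper without showing it, so the following is only one standard, numerically stable way such a helper could be written, not necessarily the one used in the demo.

```typescript
// Sketch of a numerically stable softmax: subtracting the maximum score
// before exponentiating avoids overflow without changing the result.
function softmax(scores: ArrayLike<number>): number[] {
  const values = Array.from(scores);

  let max = -Infinity;
  for (const v of values) {
    if (v > max) max = v;
  }

  const exps = values.map((v) => Math.exp(v - max));
  const sum = exps.reduce((total, e) => total + e, 0);

  // Each output lies between 0 and 1, and together they sum to 1.
  return exps.map((e) => e / sum);
}
```

A class whose score is far above the rest ends up with a probability close to 1, while several similar scores share the probability mass, which is why an uncertain prediction shows a lower value.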

This small app demonstrates how easily and quickly you can start using machine learning models and Constellation when building applications on top of Workers. Check the full source code here and deploy it yourself.

Transformers

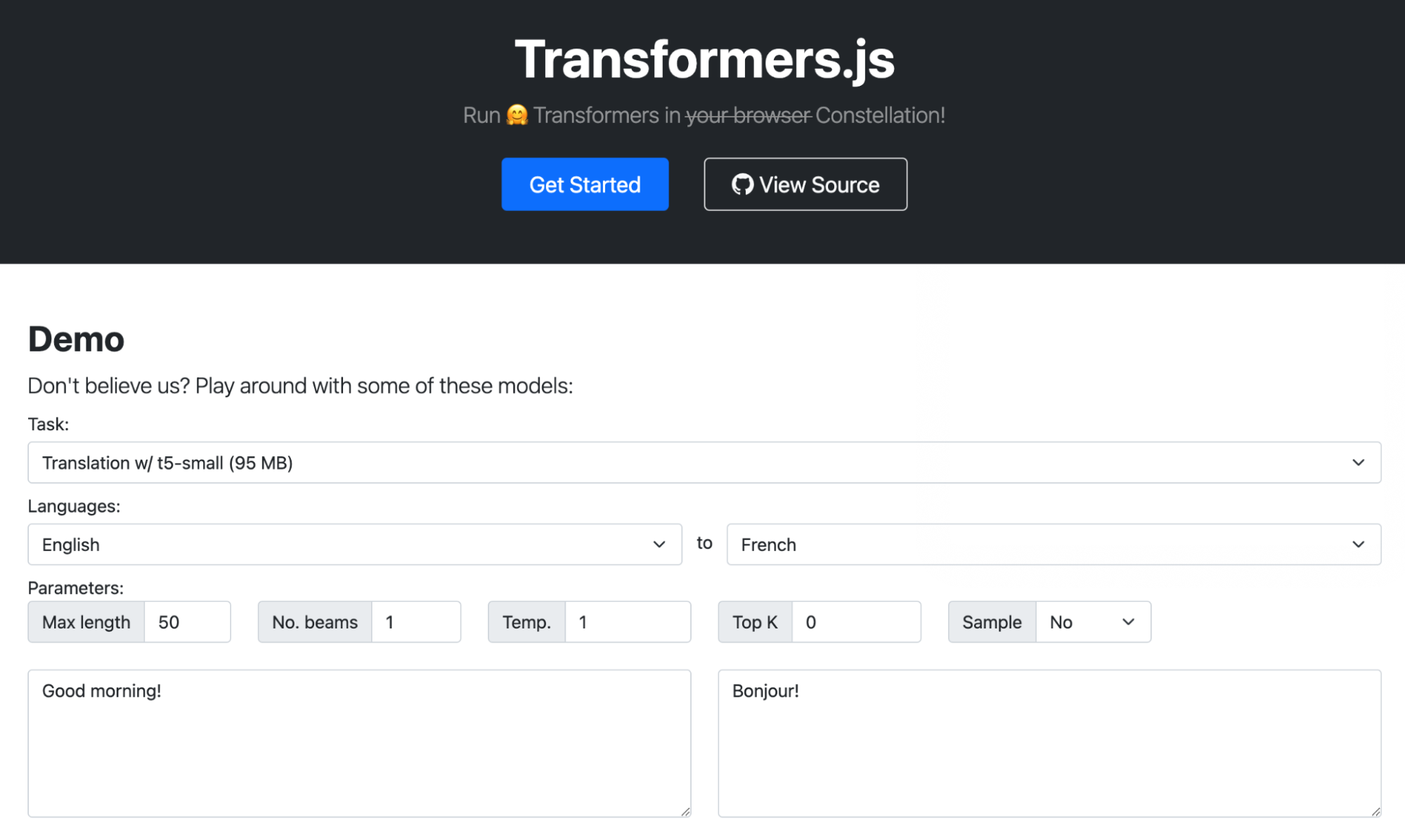

Transformers were introduced by Google; they are deep-learning models designed to process sequential input data and are commonly used for natural language processing (NLP), like translations, summarizations, or sentiment analysis, and computer vision (CV) tasks, like image classification.

Transformers.js is a popular demo that loads transformer models from HuggingFace and runs them inside your browser using the ONNX Runtime compiled to WebAssembly. We ported this demo to use Constellation APIs instead.

Here's the link to our version: https://transformers-js.pages.dev/

Interoperability with Workers

The other interesting element of Constellation is that because it runs natively in Workers, you can orchestrate it with other products and APIs in our stack. You can use KV, R2, D1, Queues, anything, even Email.

Here's an example of a Worker that receives emails for your domain on Cloudflare using Email Routing, runs Constellation using the t5-small sentiment analysis model, adds a header with the resulting score, and forwards the message to the destination address.

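    import { Tensor, run } from '@cloudflare/constellation';
    import * as PostalMime from 'postal-mime';

    export interface Env {
        // Constellation project binding for the sentiment model
        SENTIMENT: any,
    }

    // streamToArrayBuffer, tokenize, and idToLabel are helpers that are not shown here
    export default {
        async email(message: ForwardableEmailMessage, env: Env, ctx: ExecutionContext) {
            // Read the raw message from the incoming email and parse it
            const rawEmail = await streamToArrayBuffer(message.raw, message.rawSize);
            const parser = new PostalMime.default();
            const parsedEmail = await parser.parse(rawEmail);

            // Tokenize the plain-text body and run the sentiment model on it
            const input = tokenize(parsedEmail.text);
            const output = await run(env.SENTIMENT, "MODEL-UUID", input);

            // Attach the predicted label as a header and forward the message
            const headers = new Headers();
            headers.set("X-Sentiment", idToLabel[output.label]);
            await message.forward("[email protected]", headers);
        },
    };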
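
The Worker above relies on a `streamToArrayBuffer` helper that the post does not show. One possible implementation, assuming the raw message is exposed as a `ReadableStream` of byte chunks with a known total size, could look like this:

```typescript
// Sketch: drains a byte stream into a single buffer of a known size.
// Throws a RangeError if the stream yields more than streamSize bytes.
async function streamToArrayBuffer(
  stream: ReadableStream<Uint8Array>,
  streamSize: number,
): Promise<Uint8Array> {
  const result = new Uint8Array(streamSize);
  let bytesRead = 0;
  const reader = stream.getReader();

  while (true) {
    const { done, value } = await reader.read();
    if (done || value === undefined) {
      break;
    }
    // Copy each chunk right after the bytes read so far
    result.set(value, bytesRead);
    bytesRead += value.length;
  }
  return result;
}
```

Despite its name, this version returns a `Uint8Array` view of the collected bytes rather than a bare `ArrayBuffer`; whether the parser in the example needs one or the other is not stated in the post.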

Now you can use Gmail or any email client to apply a rule to your messages based on the 'X-Sentiment' header. For example, you might want to move all the angry emails out of your inbox and into a different folder on arrival.

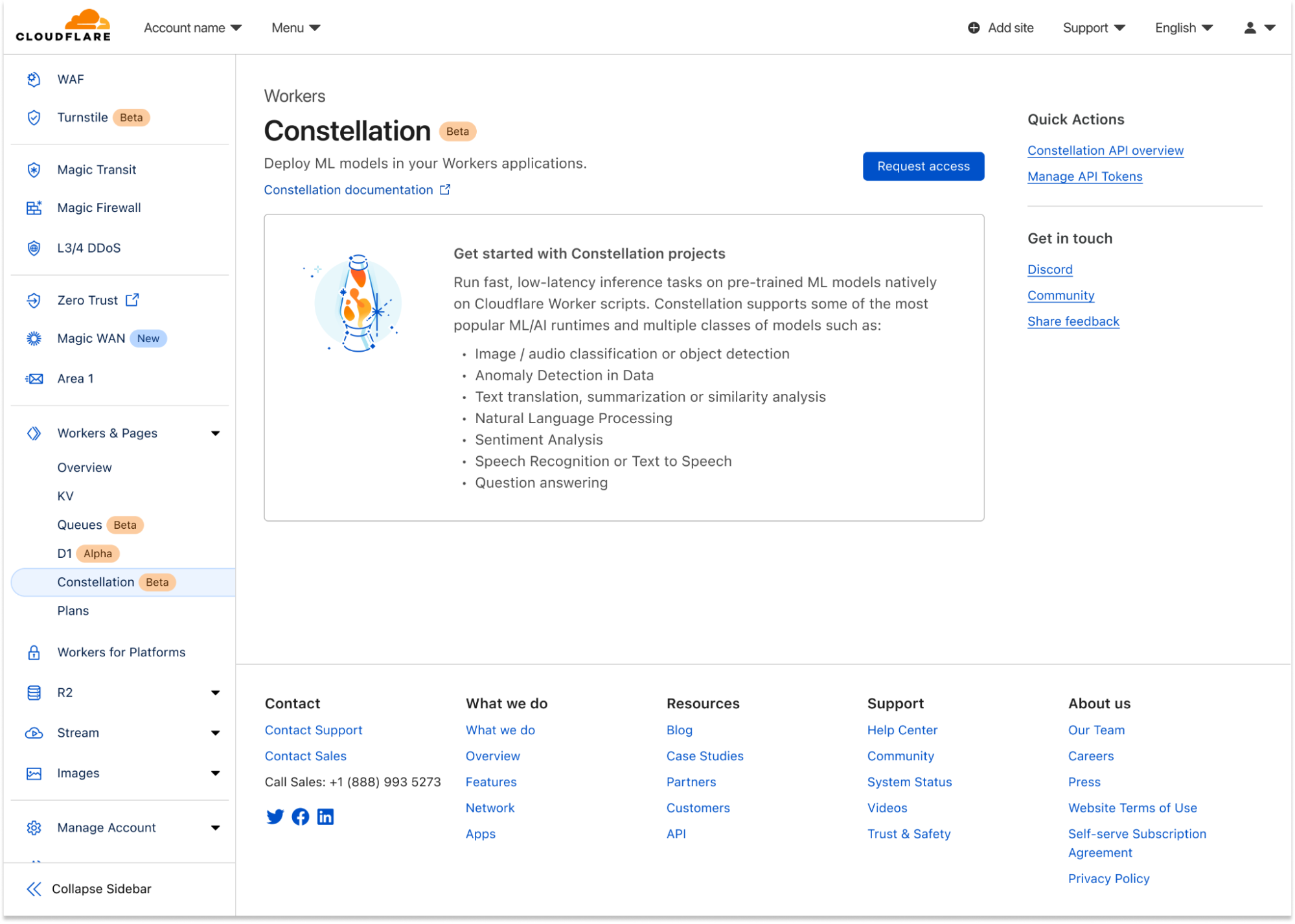

Start using Constellation

Constellation starts today in private beta. To join the waitlist, please head to the dashboard, click the Workers tab under your account, and click the "Request access" button under the Constellation entry. The team will be onboarding accounts in batches; you'll get an email when your account is enabled.

In the meantime, you can read our Constellation Developer Documentation and learn more about how it works and the APIs. Constellation can be used from Wrangler, our command-line tool for configuring, building, and deploying applications with Cloudflare developer products, or managed directly in the Dashboard UI.

We are eager to learn how you want to use ML/AI with your applications. Constellation will keep improving with higher limits, more supported runtimes, and larger models, but we want to hear from you. Your feedback will certainly influence our roadmap decisions.

One last thing: today, we've been talking about how you can write Workers that use Constellation, but here's an inception fact: Constellation itself was built using the power of WebAssembly, Workers, R2, and our APIs. We'll make sure to write a follow-up blog soon about how we built it; stay tuned.

As usual, you can talk to us on our Developers Discord (join the #constellation channel) or the Community forum; the team will be listening.
