More than ten years ago, researchers at Google published a paper with the seemingly heretical title “More Bandwidth Doesn’t Matter (much)”. We published our own blog post showing it is faster to fly 1TB of data from San Francisco to London than it is to upload it on a 100 Mbps connection. Unfortunately, things haven’t changed much. When you make purchasing decisions about home Internet plans, you probably consider the bandwidth of the connection when evaluating Internet performance. More bandwidth is faster speed, or so the marketing goes. In this post, we’ll use real-world data to show that both bandwidth and – spoiler alert! – latency impact the speed of an Internet connection. By the end, we think you’ll understand why Cloudflare is so laser focused on reducing latency everywhere we can find it.

First, we should quickly define bandwidth and latency. Bandwidth is the amount of data that can be transmitted at any single time. It’s the maximum throughput, or capacity, of the communications link between two servers that want to exchange data. Usually, the bottleneck – the place in the network where the connection is constrained by the amount of bandwidth available – is in the “last mile”, either the wire that connects a home, or the modem or router in the home itself.

If the Internet is an information superhighway, bandwidth is the number of lanes on the road. The wider the road, the more traffic can fit on the highway at any time. Bandwidth is useful for downloading large files like operating system updates and big game updates. While bandwidth, throughput and capacity are synonyms, confusingly “speed” has come to mean bandwidth when talking about Internet plans. More on this later.

We use bandwidth when streaming video, though probably less than you think. Netflix recommends 15 Mbps of bandwidth to watch a stream in 4K/Ultra HD. A 1 Gbps connection could stream more than 60 Netflix shows in 4K at the same time!
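The bandwidth arithmetic used so far can be checked in a few lines. This is a minimal sketch that assumes decimal units (1 TB = 10^12 bytes, 1 Mbps = 10^6 bits per second) and ignores protocol overhead; the function names are illustrative, not taken from any Cloudflare tool.

```python
TB = 10**12    # bytes, decimal terabyte (assumption)
MBPS = 10**6   # bits per second
GBPS = 10**9   # bits per second


def transfer_time_hours(size_bytes: float, link_bps: float) -> float:
    """Hours needed to push size_bytes through a link of link_bps, ignoring overhead."""
    return size_bytes * 8 / link_bps / 3600


def concurrent_streams(link_bps: int, per_stream_bps: int) -> int:
    """How many streams of per_stream_bps fit in link_bps at the same time."""
    return link_bps // per_stream_bps


upload_hours = transfer_time_hours(1 * TB, 100 * MBPS)
streams = concurrent_streams(1 * GBPS, 15 * MBPS)

print(f"Uploading 1 TB at 100 Mbps: {upload_hours:.1f} hours")  # 22.2 hours
print(f"4K streams on 1 Gbps: {streams}")                       # 66
```

Under these assumptions the upload takes most of a day, and a 1 Gbps line has room for 66 simultaneous 15 Mbps streams, consistent with the "more than 60" figure above.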

Latency, on the other hand, is how fast data is moving through the Internet. To extend our analogy, latency is the speed at which traffic is moving on the highway. If traffic is moving quickly, you’ll get to your destination faster. Latency is measured in the number of milliseconds that it takes a packet of data to go from a client (such as your laptop computer) to a server, and then back to the client. If you’re practicing tennis against a wall, round-trip latency is the time the ball was in the air. On the Internet “backbone”, data is traveling at almost 200,000 kilometers per second as it bounces off the glass on the inside of fiber optic wires. That’s fast!

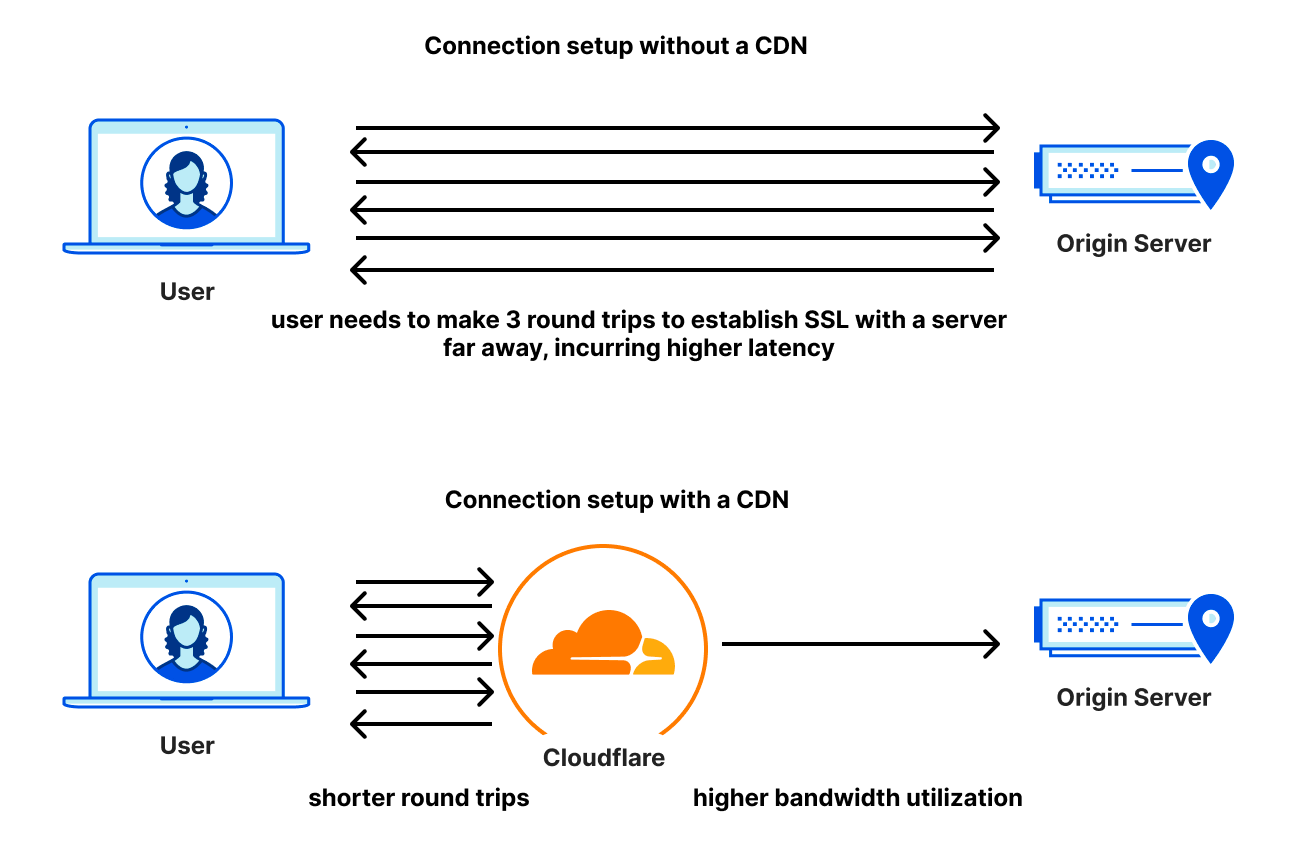

While we can’t make light travel through glass much faster, we can improve latency by moving the content closer to users, shortening the distance data needs to travel. That’s the effect of our presence in more than 285 cities globally: when you’re on the Internet superhighway trying to reach Cloudflare, we want to be just off the next exit.
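To see why distance matters, here is a rough sketch of the lowest round-trip time that fiber allows for a given distance. It uses the 200,000 km/s figure from above and counts only propagation: no routing detours, queuing, or server processing. The two distances are hypothetical examples, not measurements.

```python
FIBER_KM_PER_S = 200_000  # approximate speed of light in fiber, as quoted in the text


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time: the signal travels out and back over fiber."""
    return 2 * distance_km / FIBER_KM_PER_S * 1000


# Hypothetical distances between a user and the server answering the request.
for label, km in [("server 8,000 km away", 8000), ("server 100 km away", 100)]:
    print(f"{label}: at least {min_rtt_ms(km):.0f} ms per round trip")
```

In this sketch the far server can never answer in less than 80 ms, while the nearby one has a floor of 1 ms. Real paths are longer than the straight-line distance, so actual numbers will be higher.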

We know that low-latency (low delay; high speed) connections are important for gaming, where tiny bits of data, such as the change in position of players in a game, need to reach another computer quickly. And increasingly, we’re becoming aware of high latency when it makes our videoconferencing choppy and unpleasant.

But we don’t use the Internet only to play games, nor only watch streaming video. We do those, and we visit a lot of normal web pages in between.

In the 2010 paper from Google, the author simulated loading web pages while varying the throughput and latency of the connection. He found that above about 5 Mbps, pages don’t load much faster. Increasing bandwidth from 1 Mbps to 2 Mbps gives almost a 40 percent improvement in page load time. Going from 5 Mbps to 6 Mbps gives less than a 5 percent improvement.

When he varied the latency (the round-trip time, or RTT), something interesting happened: the returns to better latency were linear. For every 20 milliseconds of reduced latency, the page load time improved by about 10%.

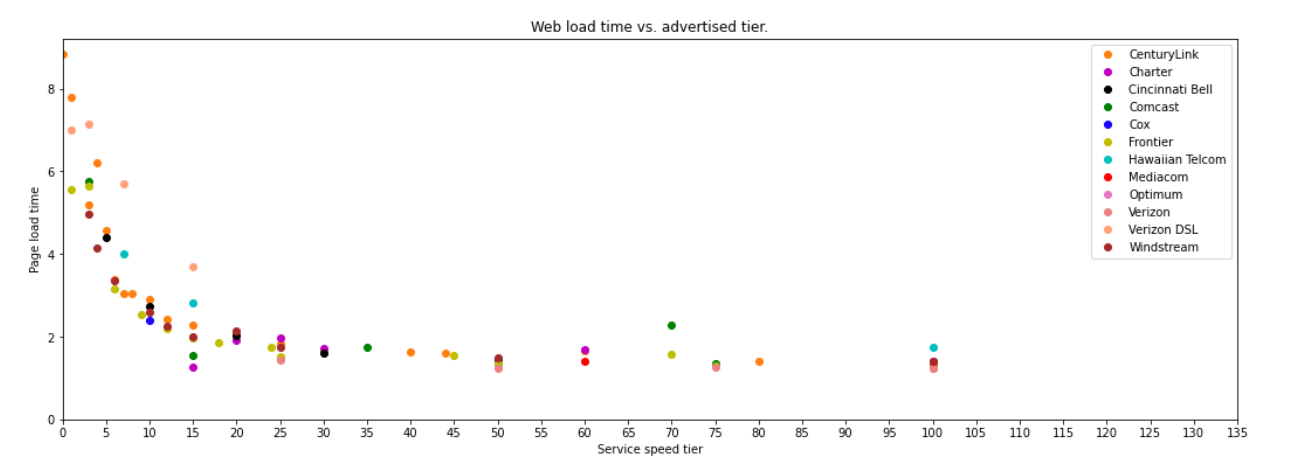
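A toy model helps build intuition for the two shapes described above. This is not the simulation from the Google paper: it simply assumes a page needs a fixed number of sequential round trips plus the time to transfer its bytes. The values for `round_trips` and `page_megabytes` are made up for illustration, so the percentages will not match the paper's.

```python
def page_load_seconds(rtt_ms, bandwidth_mbps, round_trips=20, page_megabytes=2.0):
    """Toy model: time waiting on sequential round trips plus raw transfer time."""
    latency_part = round_trips * rtt_ms / 1000            # seconds spent waiting on round trips
    transfer_part = page_megabytes * 8 / bandwidth_mbps   # seconds spent moving bytes
    return latency_part + transfer_part


# Bandwidth sweep at a fixed 100 ms RTT: each extra Mbps helps less than the last.
for mbps in (1, 2, 5, 6, 100):
    print(f"{mbps:>3} Mbps, 100 ms RTT: {page_load_seconds(100, mbps):.2f} s")

# Latency sweep at a fixed 20 Mbps: every 20 ms saved removes the same amount of time.
for rtt in (100, 80, 60, 40, 20):
    print(f" 20 Mbps, {rtt:>3} ms RTT: {page_load_seconds(rtt, 20):.2f} s")
```

In this model the transfer term shrinks toward zero as bandwidth grows, leaving the round-trip term as a floor, while each 20 ms of latency saved removes the same 0.4 seconds no matter where you start.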

Let’s see what this looks like in real life with empirical data. Below is a chart from an excellent recent paper by two researchers from MIT. Using data from the FCC’s Measuring Broadband America program, these researchers produce a similar chart to what we expect from the 2010 simulation. Though the point of diminishing returns to more bandwidth has moved higher – to about 20 Mbps – the overall trend is exactly the same.

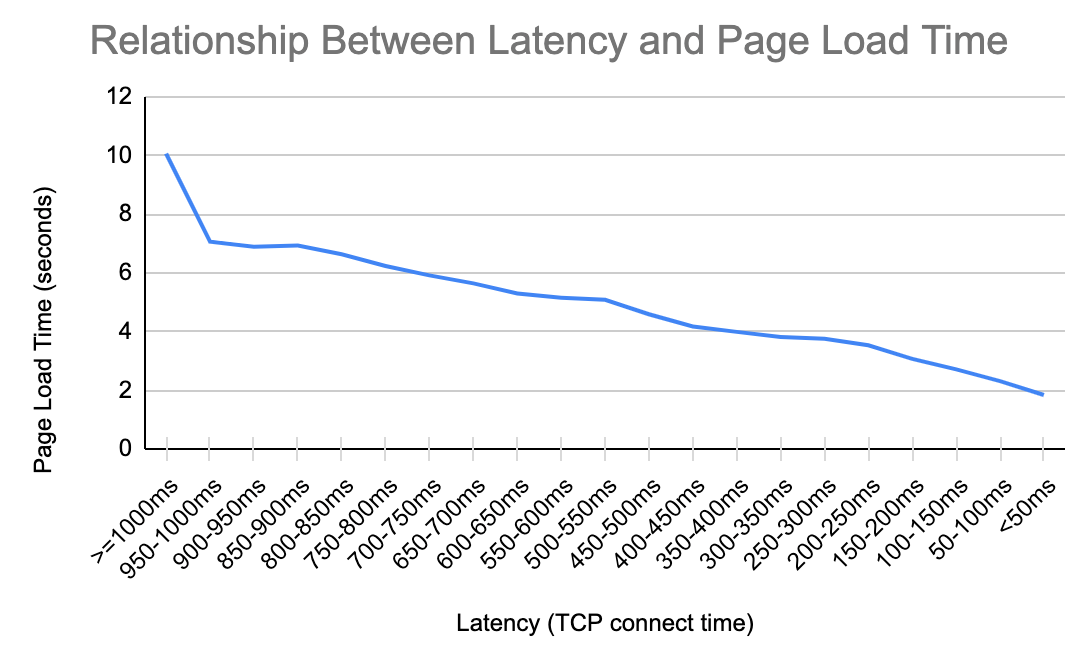

For the latency relationship, we use aggregate and anonymized data from Cloudflare’s own delivery network. It’s a familiar pattern. For every 200 milliseconds of latency we can save, we cut the page load time by over 1 second. That relationship applies when the latency is 950 milliseconds. And it applies when the latency is 50 milliseconds.

Let’s try to understand why this relationship exists. When you connect to a website, the first thing that your browser does is open a connection, encrypt it, and make sure the site you want to access is authenticated to be the site you think you’re accessing. This process consists of TCP connection establishment followed by the TLS handshake. Before you even see a pixel of content, your browser and the website will exchange information up to six times: three to perform TCP establishment, and three more to perform the TLS handshake.

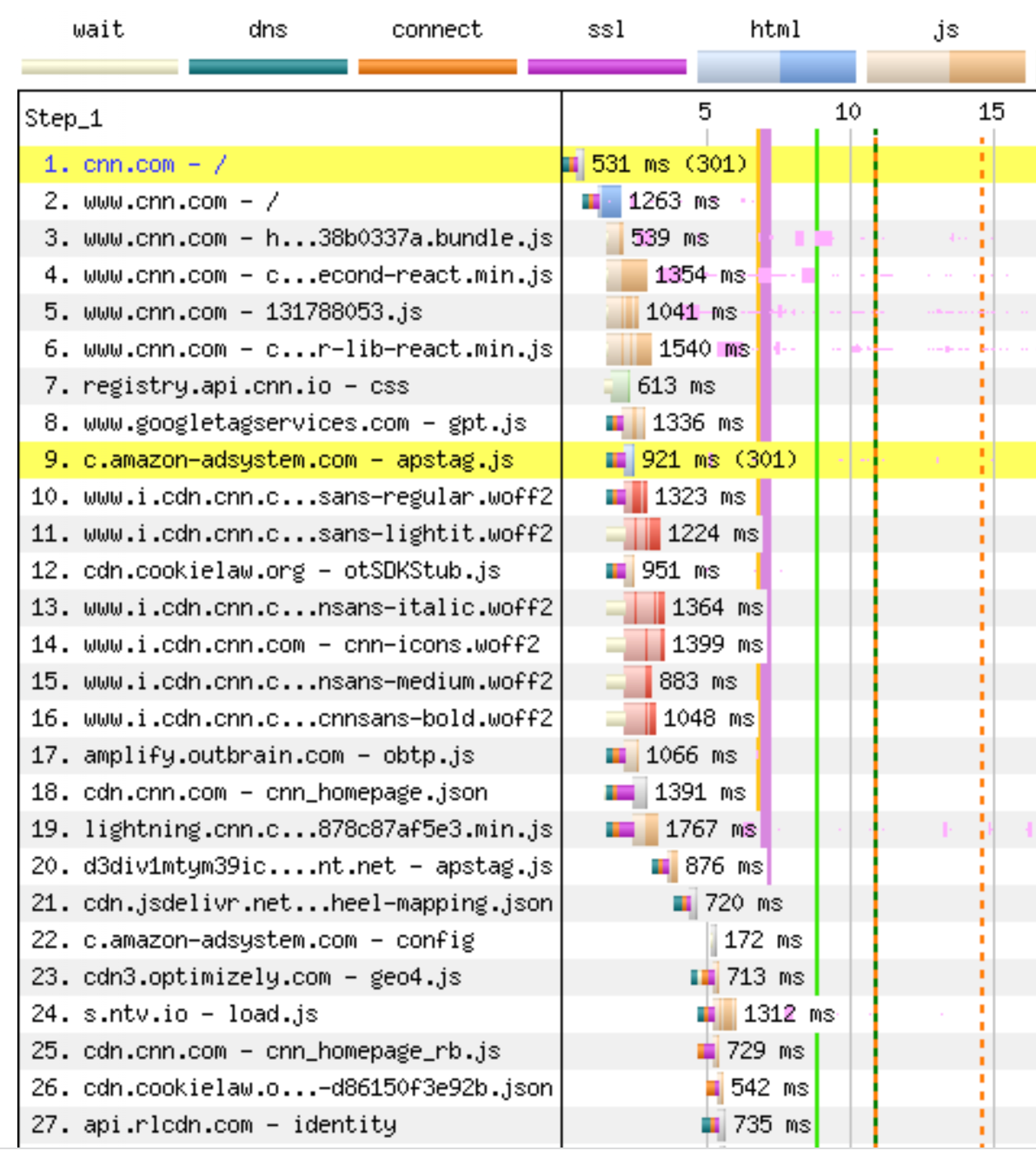
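The cost of those exchanges scales directly with latency. As a simplified sketch, assume each message must wait for the previous one and that each one-way message costs half a round trip. The default of six messages reflects the three-plus-three breakdown above; the function name and the two RTT values are illustrative.

```python
def setup_delay_ms(rtt_ms: float, one_way_messages: int = 6) -> float:
    """Connection setup time if every message waits for the previous one.

    Each one-way message costs half a round trip, so six messages cost three RTTs.
    """
    return one_way_messages * rtt_ms / 2


for rtt in (50, 950):
    print(f"RTT {rtt} ms: {setup_delay_ms(rtt):.0f} ms before any content is requested")
```

At 50 ms of latency this setup costs 150 ms. At 950 ms it costs 2,850 ms, nearly three seconds before the first byte of the page is even requested.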

On top of that, when we load a webpage after we establish encryption and verify website authority, we might be asking the browser to load hundreds of different files across dozens of different domains. Some of these files can be loaded in parallel, but others need to be loaded sequentially. As the browser races to compile all these different files, it’s the speed at which it can get to the server and back that determines how fast it can put the page together. The files are often quite small, but there’s a lot of them.

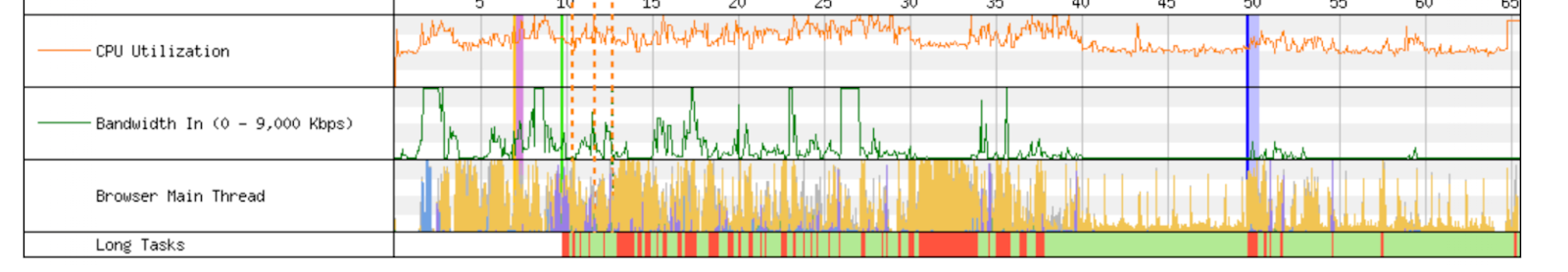

The chart below shows the beginning of what the browser does when it loads cnn.com. After a 301 redirect, the browser loads the main HTML page in step two. Only then, more than 1 second into the load, does it know about all the JavaScript files it needs to render the page. Files 3-19 become unblocked once the browser has the HTML file in step two, but we see more blocking later on. Files 20-27 are all blocked on earlier files. They can’t start until the browser has the previous file back from the server. There are 650 assets in this page load, and the blocking happens all the way through the page load. Here’s why this matters: better latency makes every file load faster, which in turn unblocks other files faster, and so on.
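The blocking behavior can be sketched as a dependency graph. In the simplified model below, a file can be requested only once everything it depends on has arrived, and each fetch costs exactly one round trip (transfer time is ignored because the files are small). The asset names and dependencies are hypothetical, not taken from the cnn.com waterfall.

```python
# Hypothetical dependency graph: asset -> assets that must arrive before it can be requested.
DEPENDS_ON = {
    "redirect": [],
    "index.html": ["redirect"],
    "app.js": ["index.html"],
    "style.css": ["index.html"],
    "widget.js": ["app.js"],
    "data.json": ["widget.js"],
}


def load_time_ms(rtt_ms: float, depends_on: dict) -> float:
    """Finish time of the last asset when every fetch costs one round trip."""
    finish = {}

    def finish_time(asset):
        if asset not in finish:
            # An asset starts when its slowest dependency has finished.
            start = max((finish_time(dep) for dep in depends_on[asset]), default=0.0)
            finish[asset] = start + rtt_ms
        return finish[asset]

    return max(finish_time(asset) for asset in depends_on)


for rtt in (100, 50):
    print(f"RTT {rtt} ms: page ready after {load_time_ms(rtt, DEPENDS_ON):.0f} ms")
```

The longest chain here is five fetches deep, so the page takes five round trips regardless of how many files load in parallel. Halving the RTT halves the total, which is the unblocking effect described above.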

The browser will do its best to use all the bandwidth available, but it is often using just a fraction of what’s available. It’s no wonder then that adding more bandwidth doesn’t speed up the page load, but better latency does. While developments like Early Hints help with this by allowing browsers to pre-fetch files and content that don’t need to be serialized, this is still a problem for many websites on the Internet today.

Recently, Internet researchers have turned their attention to using our understanding of the relationship between throughput and latency to improve Internet Quality of Experience (QoE). A paper from the Broadband Internet Technical Advisory Group (BITAG) summarizes:

“But we now recognize that it is not just greater throughput that matters, but also consistently low latency. Unfortunately, the way that we’ve historically understood and characterized latency was flawed, and our latency measurements and metrics were not aligned with end-user QoE.”

There’s a difference between latency on an idle Internet connection and latency measured in working conditions, which we call “working latency” or “responsiveness”. Since responsiveness is what the user experiences as the speed of their Internet connection, it’s important to understand and measure this particular latency.

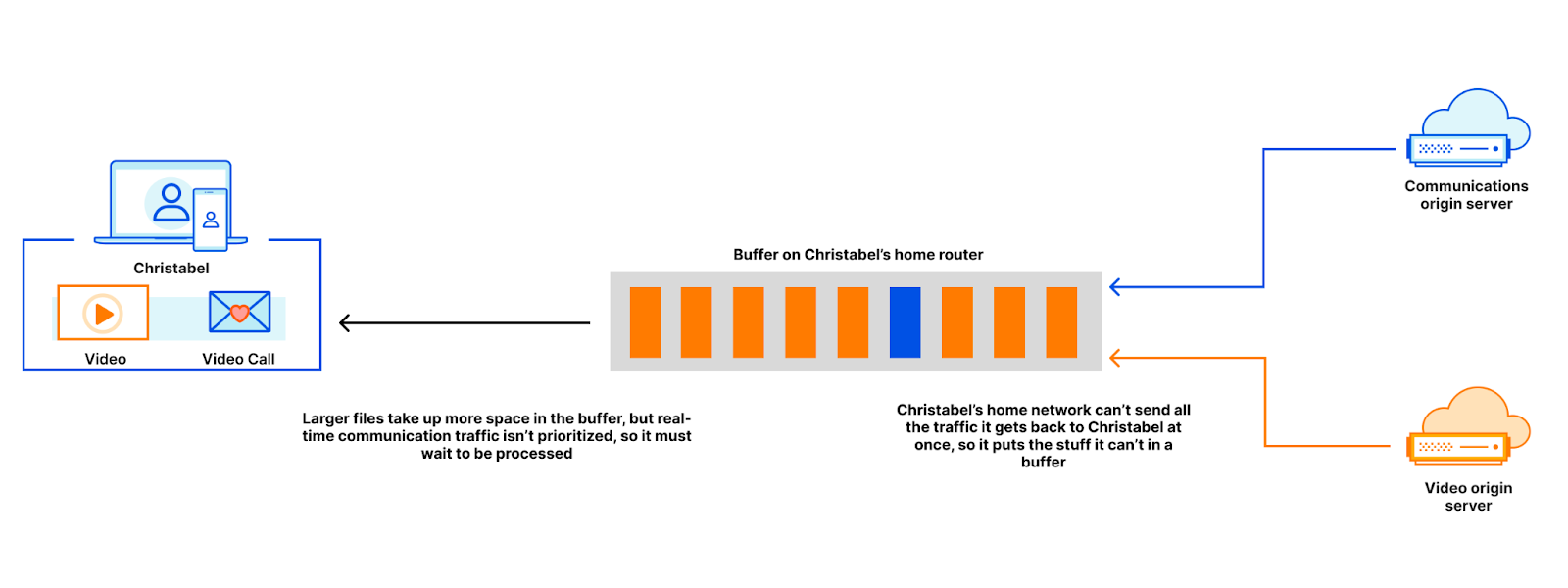

An Internet connection can suffer from poor responsiveness (even if it has good idle latency) when data is delayed in buffers. If you download a large file, for example an operating system update, the server sending the file might send the file with higher throughput than the Internet connection can accept. That’s ok. Extra bits of the file will sit in a buffer until it’s their turn to go through the funnel. Adding extra lanes to the highway allows more cars to pass through, and is a good strategy if we aren’t particularly concerned with the speed of the traffic.

Say, for example, that Christabel is watching a stream of the news while on a video meeting. When Christabel starts watching the video, her browser fetches a bunch of content and stores it in various buffers on the way from the content host to the browser. Those same buffers also contain data packets pertaining to the video meeting Christabel is currently in. If the data generated as part of a video conference sits in the same buffer as the video files, the video files will fill up the buffer and cause delay for the video meeting packets as well. That type of buffering delay is called bufferbloat, and leads to jittery video calls and delays where people speak over each other and get frustrated.

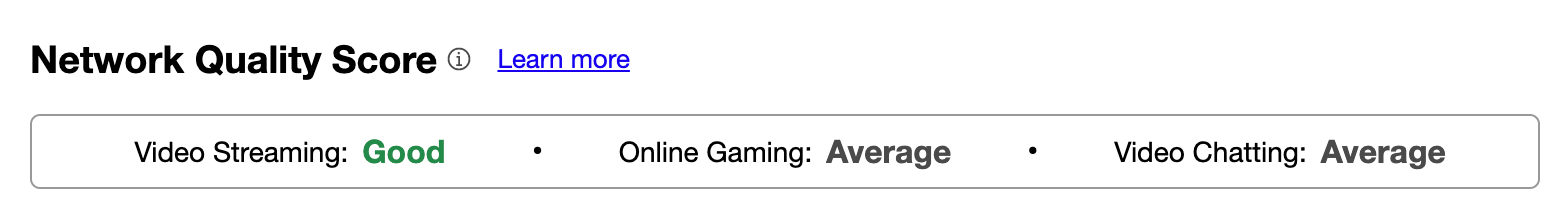
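The delay a full buffer adds can be estimated with simple arithmetic: a packet arriving behind queued data has to wait for all of it to drain through the link. The sketch below assumes a single first-in, first-out buffer in front of the bottleneck link; the buffer size, link rates, and idle latency are hypothetical.

```python
def queuing_delay_ms(queued_bytes: float, link_mbps: float) -> float:
    """Time for the data already in the buffer to drain through the link."""
    return queued_bytes * 8 / (link_mbps * 1_000_000) * 1000


def working_latency_ms(idle_rtt_ms: float, queued_bytes: float, link_mbps: float) -> float:
    """Idle round-trip time plus the wait behind queued data (single FIFO buffer assumed)."""
    return idle_rtt_ms + queuing_delay_ms(queued_bytes, link_mbps)


IDLE_RTT_MS = 20          # hypothetical idle latency
BUFFER_BYTES = 1_000_000  # hypothetical 1 MB of queued video data

print(f"Idle: {working_latency_ms(IDLE_RTT_MS, 0, 20):.0f} ms")
print(f"Buffer full, 20 Mbps link: {working_latency_ms(IDLE_RTT_MS, BUFFER_BYTES, 20):.0f} ms")
print(f"Buffer full, 100 Mbps link: {working_latency_ms(IDLE_RTT_MS, BUFFER_BYTES, 100):.0f} ms")
```

With these numbers, a connection that looks like 20 ms when idle behaves like 420 ms while the buffer is full on a 20 Mbps link. A faster link drains the buffer sooner, but the video meeting packets still wait behind the queued data.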

To help users understand the strengths and weaknesses of their connection, we recently added Aggregated Internet Measurement (AIM) scores to our own “Speed” Test. These scores remove the technical metrics and give users a real-world, plain-English understanding of what their connection will be good at, and where it might struggle. We’d also like to collect more data from our speed test to help track Page Load Times (PLT) and see how they are correlated with reductions in working latency. You’ll start seeing those numbers on our speed test soon!

We all use our Internet connections in slightly different ways, but we share the desire for our connections to be as fast as possible. As more and more services move into the cloud – word documents, music, websites, communications, etc – the speed at which we can access those services becomes critical. While bandwidth plays a part, the latency of the connection – the real Internet “speed” – is more important.

At Cloudflare, we’re working every day to help build a more performant Internet. Want to help? Apply for one of our open engineering roles here.
