Most of (anti-) malware researchers focus on malware samples, because… it’s only natural
2022-3-5 07:27:20 Author: www.hexacorn.com

Most (anti-)malware researchers focus on malware samples, because… it’s only natural in this line of work. For a while now I have been trying to focus on the opposite: the good, ‘clean’ files (primarily in the PE file format). While it may sound boring and mundane, maybe even trivial, this is actually a very difficult task!

Why? Hear me out!

There are no clean samplesets available out there (at least none that I know of), and any sampleset expires really fast (who cares about drivers for XP or Vista, or even about 32-bit files, anymore?). The ‘availability’ bit is tricky too: apart from some driver distros, originating mostly from Russia, it’s hard to download anything ‘in bulk’. And yes… in the end you are pretty much on your own when you want to collect some new ‘good’ samples…

And ‘good’ companies generate a lot of these files… many of which are not even interesting to us, and… you may ask yourself: what are all these good files really good for?

From the offensive perspective, it’s easy: find good files, check whether they are vulnerable, and for those that are, write POC exploits and either submit CVEs or sell 0days to exploit brokers. Oh wait, that’s not really ‘good’, is it? Let’s leave that one on the fence for the time being.

What about ‘le’ defense?

Basic analysis of a clean Windows sampleset from the last 20 years tells us that the most common number of PE sections in these ‘good’ executable files is 5 (31%), followed by 4 and 6 (13% each), then 3 (12%), 2 (10%), and 1 (5%).

Note: as with all statistics, these percentages are not to be trusted blindly, because they come from a relatively small set of clean files, many of which are from the PAST (2000-2020). Still, it’s something we can at least start a conversation with, right? And I doubt the percentages will vary much in larger samplesets, because good files are what they are: products of compilers, which tend to follow a template…
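A basic count like this is easy to reproduce. The sketch below is a minimal, standard-library-only Python example (not the author’s tooling): it reads `NumberOfSections` directly from the COFF file header, which follows the `PE\0\0` signature located via the `e_lfanew` field at offset `0x3C`, and turns the counts into percentages. It assumes reasonably well-formed headers; the function names and the `clean_samples` directory below are hypothetical.

```python
import struct
from collections import Counter
from pathlib import Path
from typing import Iterable, Optional


def number_of_sections(data: bytes) -> Optional[int]:
    """Read NumberOfSections from the COFF file header; None if data is not a PE."""
    # DOS header starts with 'MZ'; e_lfanew (offset of the PE signature) sits at 0x3C.
    if len(data) < 0x40 or data[:2] != b"MZ":
        return None
    (e_lfanew,) = struct.unpack_from("<I", data, 0x3C)
    # 'PE\0\0' is followed by the COFF header: Machine (2 bytes), NumberOfSections (2 bytes).
    if len(data) < e_lfanew + 8 or data[e_lfanew:e_lfanew + 4] != b"PE\0\0":
        return None
    (count,) = struct.unpack_from("<H", data, e_lfanew + 6)
    return count


def section_histogram(paths: Iterable[Path], header_bytes: int = 4096) -> dict:
    """Map section count -> percentage of PE files, most common count first."""
    counts: Counter = Counter()
    for path in paths:
        if not path.is_file():
            continue
        with path.open("rb") as fh:
            # Only the headers are needed; files with an unusually large e_lfanew are skipped.
            n = number_of_sections(fh.read(header_bytes))
        if n is not None:
            counts[n] += 1
    total = sum(counts.values())
    return {n: round(100.0 * c / total, 1) for n, c in counts.most_common()}
```

Pointed at a hypothetical local folder, `section_histogram(Path("clean_samples").rglob("*"))` returns a dict keyed by section count, which is the kind of breakdown quoted above.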

We can exploit that. And we should.

My hypothesis is that, no matter what cluster of samples we look at, most good PE files will oscillate around the 5-section mark by default. Oh… wait… Newer compilers may actually shift that number a bit higher, because they include additional sections that we now see added ‘by default’, e.g. ‘.pdata’ and ‘.didat’. To give a good example: Windows 10’s Calculator (stub) has 5 sections, and Notepad has 7.

So… the 5..7 range it is.

Anything outside of it is probably… mildly interesting. Why ‘mildly’? These numbers hold for Microsoft compilers/PE files, but files built with non-Microsoft compilers will have to fall into a different bucket. Compiler detection is critical here: only once we know the compiler can we correlate the average number of PE sections in ‘good’ files generated by that specific compiler. Think Delphi/Embarcadero, mingw, Go, Nim, Zig, Rust, PyInstaller, etc. Non-trivial 🙁
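Once some compiler-detection step (assumed here, not shown) has labeled each clean file, the bucketing described above can be sketched as follows. Only the MSVC range of 5..7 comes from the text; the compiler labels are hypothetical, and ranges for Delphi, mingw, Go and the rest would have to be learned from their own clean samples, so the sketch deliberately answers “unknown” (`None`) for them.

```python
from collections import defaultdict
from statistics import mean
from typing import Iterable, Optional

# Hypothetical compiler labels; only the MSVC range (5..7 sections) comes from the text.
# Ranges for other compilers must be learned from their own clean samples.
EXPECTED_RANGES = {"msvc": (5, 7)}


def average_sections_per_compiler(samples: Iterable[tuple[str, int]]) -> dict[str, float]:
    """Mean section count per compiler label, from (label, section_count) pairs
    produced by an external compiler-detection step (assumed)."""
    buckets: dict[str, list[int]] = defaultdict(list)
    for compiler, n in samples:
        buckets[compiler].append(n)
    return {compiler: mean(counts) for compiler, counts in buckets.items()}


def is_mildly_interesting(compiler: str, n: int,
                          ranges: dict[str, tuple[int, int]] = EXPECTED_RANGES) -> Optional[bool]:
    """True if n falls outside the expected range for this compiler,
    False if inside, None if there is no baseline for this compiler yet."""
    if compiler not in ranges:
        return None
    low, high = ranges[compiler]
    return not (low <= n <= high)
```

The averages from `average_sections_per_compiler` are what you would use to fill in `EXPECTED_RANGES` for the non-Microsoft buckets once enough clean samples per compiler have been collected.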

PE sections are for beginners, though. There is really no point spending much time on them, because the PE profiling landscape has changed a lot over the last 15 years. Luckily, there are many other properties of ‘good’ files that are worth discussing.

First, the PDB paths. Same as with malware, we can collect a large corpus of these and create a cluster of ‘reverse-logic’ yara rules. That is, if a yara rule hits because the file contains one of the legitimate-looking PDB file names/paths, the file is most likely good! Of course, it is a terribly naive assumption to believe that all files with legitimate PDB paths are good, but… why not, for starters.
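Collecting PDB paths can be automated. Microsoft-style toolchains record the PDB path in a CodeView debug record that starts with the `RSDS` signature, followed by a 16-byte GUID, a 4-byte age and a NUL-terminated path. The sketch below simply scans raw bytes for that signature instead of walking the PE debug directory; it is a shortcut that may occasionally miss or misfire, and the function names are hypothetical.

```python
import re
from collections import Counter
from pathlib import Path
from typing import Iterable

# CodeView 7.0 record: b"RSDS", 16-byte GUID, 4-byte age, then a NUL-terminated path.
# Scanning raw bytes is a shortcut; a stricter tool would parse the debug directory.
_RSDS = re.compile(rb"RSDS.{20}([^\x00]{4,1024})\x00", re.DOTALL)


def extract_pdb_paths(data: bytes) -> list[str]:
    """Return every PDB path found in RSDS records inside a file's bytes."""
    return [m.group(1).decode("utf-8", errors="replace") for m in _RSDS.finditer(data)]


def build_pdb_corpus(files: Iterable[Path]) -> Counter:
    """Count PDB paths (case-folded, since Windows paths are case-insensitive)
    across a set of known-clean files."""
    corpus: Counter = Counter()
    for f in files:
        for pdb in extract_pdb_paths(f.read_bytes()):
            corpus[pdb.lower()] += 1
    return corpus
```

Paths that occur in only one product family are the interesting ones for ‘reverse-logic’ rules; very generic paths would need to be filtered out by hand.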

For instance, if the file is not detected by any AV and contains a unique PDB string that looks like one of these clean PDB paths (on a curated list), then it’s highly likely that it is, indeed, a clean one! Right?
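The naive rule above (no AV detections plus a PDB path from the curated clean list) fits in a few lines. This is a sketch only: the detection count is assumed to come from whatever AV or multi-scanner lookup you already use, and the curated set is assumed to hold case-folded paths.

```python
def looks_clean(av_detections: int, pdb_paths: list[str], curated_pdbs: set[str]) -> bool:
    """Naive 'goodness' check: no AV hits AND at least one PDB path on the curated list.
    Both the detection count and the curated set are hypothetical inputs here."""
    if av_detections > 0:
        return False
    # Windows paths are case-insensitive, so compare case-folded strings.
    return any(p.lower() in curated_pdbs for p in pdb_paths)
```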

Together with other characteristics of good PE files, we may craft slightly more complex yara rules that could all be good indicators of file goodness without losing flexibility (e.g. rules that work across multiple versions of the same file).

I don’t want to burn ‘good’ yara rules in public, because that would kill the whole idea described above, so I won’t be posting many examples (more about this later), but let’s have a look at this PDB path:

If it was malware, you would write a Yara signature for it, right?

You can do the same for ‘good’ files.

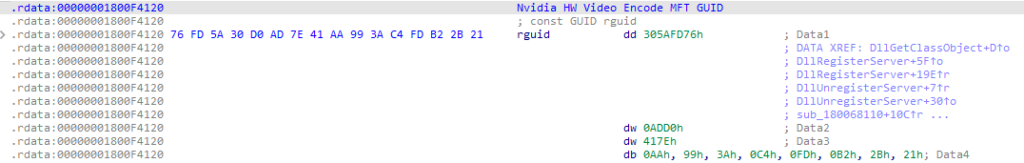

The second idea is focused on GUIDs.

Many clean files come as COM libraries, and the GUIDs referenced by their type libraries are unique. One could create another set of ‘reverse-logic’ yara rules for these, and this way discover ‘good’, clean files that reference them. A hit could be an occurrence of the GUID in string form (either ANSI or Unicode) or in its binary representation.
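The three representations mentioned above can all be derived from one GUID. In memory, a Windows `GUID` stores its first three fields little-endian, which is exactly what Python’s `uuid.UUID.bytes_le` returns, and ‘Unicode’ strings in PE files are typically UTF-16LE. The sketch below is a plain substring search under those assumptions (the helper name is hypothetical); string forms are matched case-insensitively and without braces so that both `{...}` and bare forms hit.

```python
import uuid


def references_guid(data: bytes, guid: str) -> bool:
    """Does the file contain this GUID as ANSI text, UTF-16LE text, or a binary GUID struct?"""
    u = uuid.UUID(guid)        # accepts braced or bare, upper or lower case
    core = str(u)              # canonical lowercase 8-4-4-4-12 form, no braces
    lowered = data.lower()     # ASCII-only lowercasing makes the text matches case-insensitive
    return (
        core.encode("ascii") in lowered            # ANSI string
        or core.encode("utf-16-le") in lowered     # 'wide' (Unicode) string
        or u.bytes_le in data                      # binary GUID, matched on the raw bytes
    )
```

In a YARA rule, the same idea maps to a text string with the `ascii wide nocase` modifiers plus a hex string built from `bytes_le`.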

Again, the assumption here is that bad guys don’t use the same GUIDs in their poly-/meta-morphic generators (yet). See for yourself: the GUID below has only a few Google hits and (until this post) was a good indicator of ‘goodness’:

Next, we can look at resources. Many legitimate executable files embed ‘branded’ icons. We know these are already being leveraged by some bad guys, but having a large set of these ‘good’ icons extracted from many clean samples can help route samples that include them into different pipelines.
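Routing samples by icon could look like the sketch below. It assumes the icons have already been extracted from the PE resources by some other tool and that the clean-icon collection is stored as SHA-256 digests; the pipeline names are hypothetical. Exact hashes only catch byte-identical icons, so re-encoded copies would need a fuzzier comparison.

```python
import hashlib
from typing import Iterable


def route_by_icons(icon_blobs: Iterable[bytes], good_icon_hashes: set[str]) -> str:
    """Pick a processing pipeline based on embedded icons.
    icon_blobs: raw icon images already extracted from the PE resources (not shown).
    good_icon_hashes: SHA-256 hex digests of icons harvested from clean samples."""
    for blob in icon_blobs:
        if hashlib.sha256(blob).hexdigest() in good_icon_hashes:
            # A 'branded' icon seen in clean files: genuine or borrowed by malware,
            # so compare the sample against the rest of the clean-file profile.
            return "known-icon-pipeline"
    return "default-pipeline"
```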

These icons, especially when matched with characteristics of import/export tables, their hashes, or other basic file properties like size, number of sections and their names, or even a matching subset of strings, plus version information, number of localized strings, entropy, signatures, etc., can form unique descriptors of ‘goodness’.
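One way to turn several of these properties into a single descriptor is to bucket them coarsely and hash the result, as in the sketch below. The chosen features, the 64 KiB size bucket and the one-decimal entropy rounding are illustrative assumptions; the import hash here is a plain SHA-256 over the sorted import list (not the well-known imphash), and section names and imports are assumed to come from a separate PE parser.

```python
import hashlib
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Byte-level Shannon entropy in bits per byte (0.0 .. 8.0)."""
    if not data:
        return 0.0
    n = len(data)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(data).values())


def goodness_descriptor(size: int, section_names: list[str], imports: list[str],
                        data: bytes) -> str:
    """Hash a handful of coarse file properties into one descriptor string."""
    features = (
        size // 65536,                       # file size in 64 KiB buckets
        tuple(section_names),                # e.g. ('.text', '.rdata', '.data', ...)
        # order- and case-insensitive hash of the import list (not imphash)
        hashlib.sha256("\n".join(sorted(i.lower() for i in imports)).encode()).hexdigest(),
        round(shannon_entropy(data), 1),     # entropy rounded to one decimal place
    )
    return hashlib.sha256(repr(features).encode()).hexdigest()
```

Coarse bucketing makes it more likely that two builds of the same product share a descriptor, at the cost of more accidental collisions; values sitting near a bucket boundary can still split.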

Can these be abused? 100%. This is why I am not making the results of this research public.

One thing is for sure: ‘good’ files follow patterns, they don’t change that much, and we can exploit that. And since these properties can be extracted automatically, this is, in fact, a great place for machine learning (unlike actual malware)! What if what we need is an ML/AI algo that learns from ‘good files’? Yes, it’s not a new concept, but how much of this kind of research is actually made public (especially the algorithmic part)? With this series I plan to bring some of my personal research into the public eye, in the hope that it can inspire more work in this space.
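As a toy illustration of learning from good files only, the sketch below fits a per-feature mean and standard deviation on clean feature vectors and flags anything whose largest z-score exceeds a threshold. This is the simplest form of one-class (novelty) detection, not the author’s algorithm; the feature choice (e.g. section count, entropy) and the threshold of 3.0 are assumptions, and a real pipeline would more likely reach for something like scikit-learn’s `IsolationForest` or `OneClassSVM`.

```python
import math
from typing import Sequence


class CleanProfile:
    """Toy one-class model: learns per-feature mean/std from clean files only
    and scores new files by how far they drift from that profile."""

    def __init__(self, clean_vectors: Sequence[Sequence[float]]):
        if len(clean_vectors) < 2:
            raise ValueError("need at least two clean samples")
        columns = list(zip(*clean_vectors))
        self.means = [sum(col) / len(col) for col in columns]
        # Sample standard deviation; a constant feature falls back to 1.0 to avoid dividing by 0.
        self.stds = [
            math.sqrt(sum((x - m) ** 2 for x in col) / (len(col) - 1)) or 1.0
            for col, m in zip(columns, self.means)
        ]

    def score(self, vector: Sequence[float]) -> float:
        """Largest absolute z-score across features (0.0 = a perfectly 'average' good file)."""
        return max(abs(x - m) / s for x, m, s in zip(vector, self.means, self.stds))

    def looks_good(self, vector: Sequence[float], threshold: float = 3.0) -> bool:
        return self.score(vector) <= threshold
```

For example, `CleanProfile([[5, 6.0], [6, 6.2], [7, 6.4]]).looks_good([6, 6.1])` asks whether a file with 6 sections and entropy 6.1 sits within the learned clean profile.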

And coming back to what I mentioned earlier: I do face a dilemma. I have collected many of these artifacts and statistics during my spelunking, but I don’t think it’s a good idea to share them publicly. I think there is actually scope for a DST debate, just as there is for OST, and at this moment in time I believe some artifacts or their collections (“defensive” findings, if you will) should be shared within trusted circles only!!!

Yes, it’s a 180-degree change of my stance compared to, say, 10 years ago, but we live in strange times. If I publish a list of all clean PDB paths, clean GUIDs, and clusters of legitimate icons, it’s a given that the next generation of malware will immediately re-use them in their creations! And some Red Teams may use them too.

So, it’s a NO.

I am also worried about unfair players in the corporate space who will simply acquire this data for free and use it in their commercial offerings, on both the defensive and the offensive side.

So, this is a No, too.

Yup, good sharing times are over, sorry.

Where does it leave us?

As usual, metadata ownership is king. For instance, at the moment I have 450K good PDB file paths that can be turned into ‘reverse-logic’, good yara rules with a simple script. The same goes for many other ‘good’ artifacts, e.g. a collection of ‘good’ icon files (also ~450K at the moment): yes, these too can be converted into ‘good’ yara rules, or at least used to recognize ‘ico’ files for a different processing pipeline.
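A ‘simple script’ of that kind could look roughly like the following: one rule per PDB path, with the path emitted as a YARA text string (backslashes and quotes escaped, other non-printable or non-ASCII bytes as `\xNN`) using the `nocase` modifier, plus an `MZ` check. The rule-name prefix and the `verdict` meta field are hypothetical; this is a sketch, not the author’s generator.

```python
from typing import Iterable


def yara_escape(text: str) -> str:
    """Escape a string for use inside a YARA text string literal."""
    out = []
    for b in text.encode("utf-8"):
        ch = chr(b)
        if ch in ("\\", '"'):
            out.append("\\" + ch)          # backslash and quote must be escaped
        elif 0x20 <= b < 0x7F:
            out.append(ch)                 # printable ASCII goes through as-is
        else:
            out.append(f"\\x{b:02x}")      # everything else as a hex escape
    return "".join(out)


def pdb_paths_to_yara(pdb_paths: Iterable[str], prefix: str = "good_pdb") -> str:
    """Emit one 'reverse-logic' rule per PDB path: a hit suggests a known-good file."""
    rules = []
    for i, path in enumerate(pdb_paths):
        rules.append(
            f"rule {prefix}_{i:06d}\n"
            "{\n"
            "    meta:\n"
            '        verdict = "likely_clean"\n'
            "    strings:\n"
            f'        $pdb = "{yara_escape(path)}" nocase\n'
            "    condition:\n"
            "        uint16(0) == 0x5A4D and $pdb\n"
            "}\n"
        )
    return "\n".join(rules)
```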

Do you want it?

If you process a lot of samples and you think you may benefit from excluding good files by using such ‘reverse-logic’ yara rules, you can contact me from your legitimate company email address. If you do not plan to resell this data, or are an NPO/NGO, I will happily share it with you for free. If you want to benefit from it commercially and use it in your products or investigations, I will require a one-off payment; this is only to support my infrastructure and the purchase of extra HDD space for further sample collection and analysis.

Contact me with some details on how you would like to use this data!
